Hi, it’s Alex from 20VC. I’m investing in seed & series A European vertical solutions (vSol) which are industry specific solutions aiming to become industry OS and combining dynamics from SaaS, marketplaces and fintechs. Overlooked is a weekly newsletter about venture capital and vSol. Today, I’m sharing the insightful tech news of September.

Sunday, Sep. 1st: Ramp has released a report analyzing AI spending trends based on the purchasing behavior of its customer base. - Ramp

“In Q2, mean AP spend with AI vendors rose 375% year over year. As expected, these AP expenditures were dominated by OpenAI, with companies spending $181K on average.”

“Anthropic is seeing particularly rapid adoption when we look at the percentage of businesses purchasing AI models on Ramp cards. After hovering at 4% at the start of the year, its market share jumped to 17% in Q2.”

“Our data show customers who started spending with top AI vendors in 2023 are likelier to stick with these vendors than those who started spending in 2022 or 2021—a signal that companies are increasingly convinced of AI's value.”

“Vendors that offer novel approaches to everyday work are becoming commonplace, as companies turn to AI to boost employee productivity.

Suno AI, for instance, lets anyone generate music using simple text prompts.

Limitless runs in the background to capture your audio and screen automatically so you can generate meeting summaries, emails, and more at a later time.

Instantly offers AI for sales engagement and lead intelligence AI.

Cursor makes an elegant AI code editor. All of these vendors are popular with small and large businesses alike.”

Monday, Sep. 2nd: Marie Brayer explored LLMs’ adoption in enterprises. - SOTA 1, SOTA 2

There are currently three main enterprise use-cases: (i) transforming human language into computer actions (e.g. streamlining customer support, reducing admin tasks, removing the need for complex interfaces), (ii) helping people make sense of information overload and (iii) helping humans to produce content at a faster pace.

Today, people are using open-source because they want control and customisability but next year, cost will be the main reason to pick open source when people realize how much scaled LLM pipelines cost.

“However, seeing how many non-‘pure-player’ companies will keep investing 9 figures+ into training models in the long term will be interesting, as the game is a bit more expensive than traditional open-source.”

“Historical data & ML platforms that already have access to enterprise data seem ideally positioned to train or help fine-tune models that could sweep the market. However, no killer use case or feature has added a clear line to their P&L yet.”

“Whereas overall sentiment towards LLMs is excitement about the potential, the reality is that, except for a few determined use cases in high-tech companies (startups & software vendors), the market has not successfully yet produced clear wins that directly translate to an increase in P&L.”

“The limits of LLM technology are overall very misunderstood even by seasoned data scientists because this field is brand new; plus, most of the LLM science is not mature. For example, getting RAG to deliver something other than a disappointing result is “a dark art.” A lot of people who get “33% accuracy” on their pilot don’t measure how difficult (or impossible) it is to reach 99% accuracy with the current models.”

“The business model of an LLM pipeline is not a given, as the system can cost 1 to 10 euros per inference request (read that again) or more in compute or API costs for complex use cases. It’s not as cheap as running “normal data science” pipelines where the only cost is the team. Everything from finetuning to inference is expensive. Massive ROI needs to be present to justify the cost.”

“[LLMs are used] notably because of their vision capabilities, to empower forms of RPA (robotic process automation).”

“LLMs helped Document processors build more robust, higher-value use cases and might be killing the game.”

UiPath is trying to incorporate LLMs into its platform via its seed investment into H. “H’s goal is to develop a large action model to make LLM-based RPA a reality and deliver it to UiPath’s customers through a tight-knit partnership.”

ServiceNow acquired ElementAI for $500m to integrate AI into its platform. “The tier one AI team, created from the ElementAI acquisition, delivered on many fronts:

an impressive collaboration with Huggingface called BigCode, that led to the training of one of the best code generation model families,

a bunch of AI-powered internal AI tools and improvements, customer-facing or not,

a smart chatbot, “Now Assist,” was released in Nov. 2023. You can think about it as a Copilot-Chatbot for all the functions supported by ServiceNow.”

“LLM in the Enterprise is in the pilot phase for now, and the very high expectations of AI doing complex stuff face scientific and technical blockers before being delivered.”

Tuesday, Sep. 3rd: Dan Shipper wrote about OpenAI’s new Strawberry model. - Chain of Thought

“OpenAI has created a new model called Strawberry that could represent a major leap forward in its ability to reason.”

“It appears to be a language model with significantly better reasoning abilities than current frontier models. This makes it better at solving problems that it hasn’t seen before, and less likely to hallucinate and make weird reasoning mistakes.”

“Process supervision means that during training, a model is rewarded for correctly moving through each reasoning step that will lead it to the answer. By comparison, most of today’s language models are trained via “outcome supervision.” They’re only rewarded if they get the answer right.”
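To make the distinction in the quote above concrete, here is a minimal sketch of the two reward schemes. It is only an illustration of the idea as described, not OpenAI's actual training code; the function names and the hand-labelled step list are hypothetical.

```python
from typing import List


def outcome_reward(final_answer_correct: bool) -> float:
    """Outcome supervision: one reward, based only on the final answer."""
    return 1.0 if final_answer_correct else 0.0


def process_rewards(step_is_correct: List[bool]) -> List[float]:
    """Process supervision: one reward per reasoning step."""
    return [1.0 if ok else 0.0 for ok in step_is_correct]


# Hypothetical 4-step solution: the third step is wrong, so the rest
# of the chain (and the final answer) is wrong too.
steps = [True, True, False, False]
final_answer_ok = False

print(outcome_reward(final_answer_ok))  # a single signal for the whole solution
print(process_rewards(steps))           # shows where the reasoning went off track
```

With outcome supervision the model only learns that the whole attempt failed; with process supervision it also gets credit for the first two steps and a signal about which step broke the chain.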

“OpenAI originally developed Strawberry to create training data for its newest foundation model, codenamed Orion. Strawberry can be prompted to generate a vast custom training set of problems with step-by-step solutions that Orion can learn to solve and, therefore, hopefully hallucinate less when it encounters similar problems in the wild.”

“While Strawberry was originally built to create training data, OpenAI has plans to release a smaller, faster version of it as a part of ChatGPT as soon as this fall, potentially representing a major upgrade to the LLM’s current reasoning abilities.”

“OpenAI has chosen to use Strawberry to generate more synthetic data of a certain type: logic and reasoning problems, and their solutions.”

Wednesday, Sep. 4th: Meta shared data on Llama’s usage trends and practical applications. - Meta

Llama’s open-source nature has spurred rapid adoption, with 350m downloads on Hugging Face. Companies like AT&T, DoorDash, and Goldman Sachs are integrating Llama for AI-driven innovation.

“Accenture is using Llama 3.1 to build a custom LLM for ESG reporting that they expect to improve productivity by 70% and quality by 20 – 30%, compared with the company’s existing way of generating Accenture’s annual ESG report.”

“DoorDash uses Llama to streamline and accelerate daily tasks for its software engineers, such as leveraging its internal knowledge base to answer complex questions for the team and delivering actionable pull request reviews to improve its codebase.”

“Shopify is continuing to experiment with best-in-class open source models, including LLaVA, which is built on the foundations of Llama. They use finetunes of LLaVA for multiple specialized tasks and are currently doing 40M – 60M Llava inferences per day supporting the company’s work on product metadata and enrichment.”

“Llama models are approaching 350 million downloads to date (more than 10x the downloads compared to this time last year), and they were downloaded more than 20 million times in the last month alone, making Llama the leading open source model family.”

“Monthly usage (token volume) of Llama grew 10x from January to July 2024 for some of our largest cloud service providers.”

Thursday, Sep. 5th: Ikea is adapting to changing consumer habits by expanding e-commerce, smaller urban stores, and sustainability efforts. Online sales now make up 23% of its total, and new “planning centres” and stores in cities like New York and London aim to bring Ikea closer to customers. A key initiative is its second-hand furniture platform, allowing customers to resell Ikea products with Ikea-provided pricing and instructions. This move supports both sales and environmental goals, as the company works to reduce waste and carbon emissions while promoting sustainability. - The Economist

“IKEA products already account for around a third of second-hand-furniture sales by volume.”

“Since 2016 the company has expanded its sales by a third but reduced carbon emissions from its operations by half.”

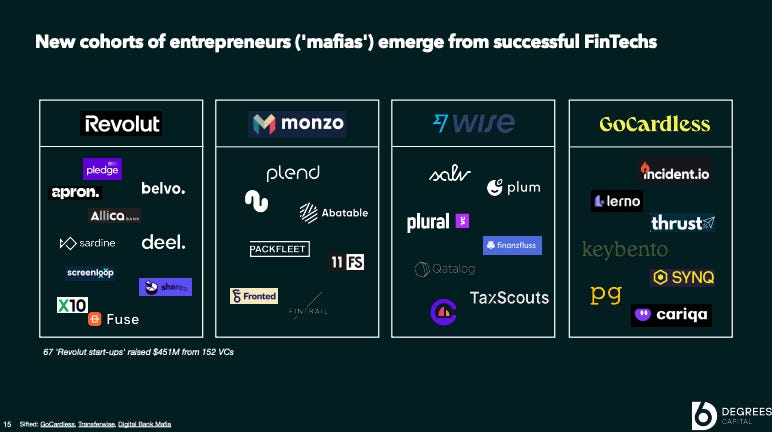

Friday, Sep. 6th: 6 Degrees Capital shared a presentation on the UK’s fintech ecosystem. - 6 Degrees

Saturday, Sep. 7th: Fidelity wrote about the wealth management industry. - Fidelity

“In the United States alone, an estimated $84tn will change hands, primarily moving from Baby Boomers to Millennials and Gen Z.”

“Many traditional wealth management firms are caught at a crossroads, struggling with legacy technology, and ever evolving client expectations. These cumbersome systems are expensive to maintain and lack the flexibility needed to keep pace with modern market demands. Today, nearly 50% of wealth management firms report that their technology platforms are not fully integrated. This lack of integration leads to inefficiencies, higher operational costs, and ultimately higher fees for clients. Additionally, these outdated systems often rely on manual processing, limiting scalability and slowing down service delivery.”

“The number of people aged 65+ in the US is projected to rise from 60 million today to 100 million by 2060 (U.S. Census Bureau’s Population Projections). This demographic shift presents significant opportunities to develop digital-first solutions for a tech-savvy generation entering retirement.”

“Mid-market wealth managers and family offices have traditionally relied on a mishmash of legacy platforms to perform their administrative back and middle office tasks such as portfolio management, books of record and risk analysis. Designed for large institutions, this expensive and cumbersome software is a drain on the squeezed margins of mid-market firms. Companies like Performativ are already innovating in the space, offering a fully integrated, front-to-back wealth management operating system. Their platform seeks to consolidate all functionalities (portfolio management, CRM, risk analysis, back office) into a single solution, enabling wealth managers to deliver personalised services to their clients effectively.”

Sunday, Sep. 8th: Paul Graham wrote an article arguing that conventional advice for running large companies often harms rather than helps founders. He emphasized the importance of a "founder mode," which allows founders to engage more directly with their teams instead of relying solely on traditional management practices. Understanding and developing founder mode could lead to better outcomes for startups as they grow. - Paul Graham

“I hope in a few years founder mode will be as well understood as manager mode.”

“Whatever founder mode consists of, it's pretty clear that it's going to break the principle that the CEO should engage with the company only via his or her direct reports.”

“You tell your direct reports what to do, and it's up to them to figure out how. But you don't get involved in the details of what they do. That would be micromanaging them, which is bad. Hire good people and give them room to do their jobs.”

Monday, Sep. 9th: Hayden Capital wrote about AppLovin in its latest investor update. AppLovin offers marketing, monetization, and analytics solutions to mobile app developers to boost user engagement and revenue. - Hayden Capital

“Applovin is an advertising network for mobile apps (in particular, casual mobile games). Essentially, they are a market-maker for those looking to buy and sell ads – helping apps acquire users and monetize themselves, in an extremely competitive industry.”

“The company facilitates over $10 billion dollars of volume annually for its mobile gaming clients, and is expected to make $4.4BN in revenue, $2.5BN in EBITDA, and $1.8BN in Free Cash Flow this year.”

“This is a two-sided market, and might be easiest thought of like a real estate transaction. AppDiscovery is the “buyer’s broker” and the Max mediation platform is the “seller’s broker”. They are one of the largest players on both the “buyer’s” side (3rd largest ad network, behind Google and Meta) and the “seller’s” side (~60 - 70% market share). Applovin takes a cut in the process, typically 20 - 30% of the total ad spend with the rest going to the publisher.”

“This is why an effective ad network is extremely important to the mobile gaming ecosystem. Monetizing quickly & then pushing users to the next game is vital to the casual game business model. It’s possible that without effective ad networks like Applovin, the entire genre of free-to-play games might not exist.”

“There have been a few developments in the past few years though, that make this company especially exciting. Apple rolling out ATT, Unity’s missteps, and Applovin’s improved AI engine have all been enormous tailwinds.”

“Applovin shrewdly recognized the need to “own” first-party data early on, and spent ~$1 billion to acquire or partner with gaming studios, starting in 2018. These studios have a combined ~200 games, which provide data on over 200 million users to Applovin’s advertising engine, that is outside the confines of ATT.”

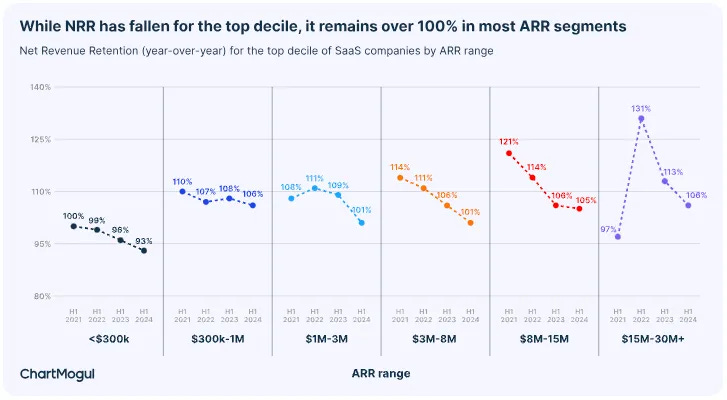

Tuesday, Sep. 10th: SaaS companies need to focus on net revenue retention (NRR) for sustainable growth in 2024, as expansion now accounts for a larger portion of growth. Achieving an NRR of over 100% is becoming more difficult, but it remains crucial for driving higher growth rates. Companies with strong NRR tend to grow faster, highlighting the importance of customer retention and finding the right customers. - ChartMogul

“Retention is now critical for long-term, sustainable growth. In 2024, companies with $15M-30M+ ARR are seeing 40% of their growth driven by expansion, compared to 30% in early 2021, when growth peaked.”

“To succeed today SaaS companies must focus on capital efficient growth. Best-in-class SaaS companies now focus on retention and expansion, and mature companies are shifting their growth strategies to prioritize existing customers.”

“During the first half of 2021, companies across all ARR segments reached their highest growth peak. They did this by acquiring new customers. But over the past three years, the way companies grew has relied much more on expansion. Bigger companies with larger workforces and customer bases naturally tend to focus more on retention. But today, not only is retention a focus, it’s a growth driver. Expansion now contributes up to 40% of growth for companies with $15M-30M+ ARR.”
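For readers less familiar with the metric, here is a rough sketch of how NRR and the "share of growth from expansion" can be computed. It uses the common textbook definition of NRR, not necessarily ChartMogul's exact methodology, and the figures are made up for illustration.

```python
def net_revenue_retention(starting_arr: float, expansion: float,
                          contraction: float, churn: float) -> float:
    """NRR over a period, computed on the customers present at the start.

    Common definition (assumed here):
    (starting ARR + expansion - contraction - churn) / starting ARR.
    """
    if starting_arr <= 0:
        raise ValueError("starting_arr must be positive")
    return (starting_arr + expansion - contraction - churn) / starting_arr


def expansion_share_of_growth(new_business: float, expansion: float) -> float:
    """Share of gross new ARR that comes from existing customers (assumed definition)."""
    total = new_business + expansion
    if total <= 0:
        raise ValueError("no gross new ARR in the period")
    return expansion / total


# Hypothetical company with $20m ARR at the start of the year.
nrr = net_revenue_retention(20_000_000, expansion=3_000_000,
                            contraction=500_000, churn=1_500_000)
share = expansion_share_of_growth(new_business=4_500_000, expansion=3_000_000)

print(f"NRR: {nrr:.0%}")                       # above 100% means the base grows on its own
print(f"Expansion share of growth: {share:.0%}")
```

An NRR above 100% means expansion more than offsets contraction and churn, so the existing customer base grows before any new customer is signed.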

Wednesday, Sep. 11th: Rick Zullo at Equal Ventures wrote about the potential uniformisation and decline of VC firms. Concentration of capital among a few large firms is stifling innovation and diversity within the industry. Many emerging managers are pressured to imitate established firms, creating homogeneity. It could lead to a “VC extinction event” where only mega-firms survive, similar to asset managers like BlackRock. To avoid this, stakeholders must embrace more diverse strategies and thinking to prevent the industry’s collapse. - Rick Zullo

“The majority of the LP landscape remains focused on these big names and they are heavily incentivized to do so.”

“When LPs do invest in a new emergent fund, their preference is to back spin outs of these large organizations or those with deep ties to those firms. I personally believe this is misguided (most of the best firms from the last 2 decades (e.x. USV, First Round, Founder Collective, IA Capital, Forerunner, Emergence, etc.) were not spin outs from large Bay Area venture shops), but also creates an insular feedback loop to reinforce the mega fund machine.” “LP preference for this pattern has incentivized the landscape of emerging managers, pressuring managers to look more and more like miniature copies of these firms.”

“When a small subset of funds force the industry to uniform, consensus investing, then I think we’ve lost the essential nature of VC. At that point, smaller firms are just outsourced resources, not independent investors.”

“These incentives may yield a terminal state where venture capital ceases to exist in its current form - one where our industry becomes so uniform in feeding the mega fund machine (making those firms even bigger and these incentive structures stronger) that our industry ultimately looks more like Blackrock than Benchmark.”

“My fear is that we will see an extinction level event to the venture industry if left unaddressed.”

Thursday, Sep. 12th: I listened to a Colossus podcast episode on AI with Gavin Baker, managing partner and CIO at Atreides Management. - Colossus, Gavin Baker

Reaching AGI is seen as the ultimate race between tech giants which are not focused on ROI at the moment. “The people who actually control these companies…believe they’re in a race to create a Digital God…if they lose that race, losing the race is an existential threat to the company.” “Mark Zuckerberg, Satya and Sundar just told you in different ways, we are not even thinking about ROI.”

GPT models may not stay commodities forever. “Once we get to GPT-7 or 8 that literally costs $500 billion to train, I don’t think they’re going to stay commodities.”

Multiple competing LLMs are crucial for humanity. “It is supremely important for humans that we do not end up in a world where there is just one dominant model. That is the most dystopian future I can imagine.”

“ROI on AI has been positive thus far. This is undeniable. ROIC at the largest spenders on AI has gone up significantly since they ramped their datacenter/GPU capex spend last year. Most of the ROI on AI thus far has come from improved advertising targeting and creative leading to higher ROAS for customers.”

“The only way to generate ROI on a model is to have unique, valuable data and internet scale distribution. Absent unique data and internet scale distribution, these models are essentially commodities today (might change as scale becomes a barrier) which is why it was relatively easy for Meta to open source Llama - the value will mostly come from the data, not the model at least for now.”

Robotics combined with AI will redefine the labor market. “Putting LLMs into these humanoid robots I think is going to be so transformational for the world and make a lot of blue-collar labor optional.”

Synthetic data is allowing AI models to continue scaling despite data limitations. While no one fully understands why synthetic data works, it is already proving to be a solution to a potential bottleneck in AI training.

AI tools are replacing repetitive tasks in industries like research, advertising, and software development. Even simple AI wrappers around LLMs are already transforming workflows, making workers significantly more efficient.

Friday, Sep. 13th: I listened to Josh Wolfe on the Logan Bartlett Show. - Logan Bartlett

“I'm competitive. I want to be smarter than the next person. I want to know something that somebody else doesn't know. That is an addictive feeling.” “I need to know more than you about this particular topic.”

“Today, a lot of people are working on all the different variations of GLP1. It’s a crowded space. I would not be very bullish on the incremental advance there because it has to be so much better that it's improbable to really get the attention to get the investor demand, to get the media attention and to get the desire for people to want to work for that company.”

“If you look at all the risks of a company (financing risk, market risk, technology risk, people risk, competition risk), every risk that we can identify and kill with time or talent or money thrown at it should be rewarded. A later investor that comes should pay a much higher price and demand a lower quantum of return. I should get rewarded in the form of value creation.”

“We want people to agree with us just later. The contrarian aspect [of my investing style] is just being earlier.”

“I just start reading scientific papers [on a topic I’m interested in] and my goal is to be incrementally smarter in every conversation so that by the sixth or seventh person I'm talking to [on this topic, they are impressed by my knowledge]. That to me is sort of like a competitive, obsessive thing. From nuclear waste, to geopolitical controversy in the Sahel and Maghreb, to aerospace and defense, to satellite and manufacturing. I need to know more than the next person about whatever that new thing [is].”

“I get obsessed about something typically on like a six, eight week basis.” “Sometimes, I get obsessed with something and it just sits there. Sometimes it's something where I'm like, this is inevitable. But it might just take forever and I'm waiting for the entrepreneur, or maybe there's a technological breakthrough.”

Saturday, Sep. 14th: Nichole Wischoff shared the fundraising deck she used to raise her $50m third fund. - Nichole Wischoff

Sunday, Sep. 15th: Chris Paik at Pace Capital wrote an essay on being intentional in understanding one’s own judgment and biases while investing. - Chris Paik

Investing involves two areas of study: the outward-facing world of evaluating businesses and markets, and the inward-facing world of understanding one’s own judgment and biases. While most investors focus on the former, true success often comes from studying oneself.

“In the world of investing, there are two areas of study. The first world is outward-facing—the study of what makes a good investment opportunity, a good business, fundamentals, frameworks, etc. The second world of study is inward-facing—the study of one’s own judgment, mental biases, where intuition is perfectly right and where it is perfectly wrong, motivators that skew incentives, and our natural tendency to want to outsmart ourselves.”

“Objectively, you are capable of having 100% conviction in something and also being 100% wrong. Sitting with that as a truth about one’s judgment, while uncomfortable, ought to prompt us to be hungry to debug ourselves (rather than dismiss it to protect our ego). If we do this well enough, we can study ourselves at a distance, like an anthropologist, and come to valuable conclusions and workarounds.”

“If I don’t do the work because I’m too lazy, that means I don’t feel strongly enough about the opportunity and we should not make the investment.”

“I should pursue investment opportunities alone because if I have a team that does the work, we will end up with a memo and have to make an investment decision but I won’t know how convicted I am in the opportunity.”

“The challenge with this inward-facing world of study is that it is fractal. We constantly try to outsmart ourselves once we know how we operate. It is a constant cat and mouse game between our rational self and meta-analysis, each vying for superposition. But it’s also where the most alpha as investors comes from and is wholly unique to each individual.”

Monday, Sep. 16th: Jordan Nel at Hummingbird wrote about the growing gap between large venture funds and small/emerging funds. He argues that small funds are the only ones that are non-consensus and high alpha, while larger funds face challenges due to inflated valuations and over-reliance on consensus deals. - Jordan Nel

“Big funds are forced to look for deals which can both accommodate large checks and have potentially enormous outcomes. So they invest in companies with pedigreed teams, obvious markets, and which could be enormous, but which also raise big dilutive rounds and have a lot of expectation baked into their valuations.”

“Venture is meant to be the riskiest asset class, where conviction matters the most. With the big funds now facing deployment pressure, doing more expensive deals, and having trained a generation of investors to source and sell rather than pick, the field is more open than most think.”

“Investing with emerging managers requires parsing their real unique insights (for instance: why did they invest in this specific team and not their competitor with the same narrative and more pedigree?) and getting comfortable that they’ll nail the transitory period of non-consensus to consensus.”

Tuesday, Sep. 17th: Tanay Jaipuria compared OpenAI and Anthropic’s revenues. - Tanay

OpenAI is forecasted to generate $3.7bn in revenues in 2024 with 73% coming from ChatGPT subscriptions and 27% coming from its API. OpenAI will burn $5bn in 2024. Its API business is operating at a 50% gross margin meaning that the losses are mostly coming from its ChatGPT business and operating costs.

Anthropic is forecasted to reach $1bn in annualised run rate revenues at the end of the year (10x YoY growth) with 60% coming from its API distributed via Amazon, 25% from its own API and 15% from its Claude subscription. Anthropic will burn $2bn in 2024.

“Distribution remains king, even for developer products. Anthropic generating ~65% of their revenue from their third party API highlights how important distribution is.”
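As a quick back-of-the-envelope check, the percentage splits above can be turned into dollar figures. This is only arithmetic on the forecasts quoted in this entry; the helper name is hypothetical, and the Anthropic numbers apply to its year-end annualised run rate rather than to full-year 2024 revenue.

```python
from typing import Dict


def split_revenue(total_bn: float, shares: Dict[str, float]) -> Dict[str, float]:
    """Turn percentage shares into $bn amounts; shares must sum to 100%."""
    if abs(sum(shares.values()) - 1.0) > 1e-9:
        raise ValueError("shares must sum to 1.0")
    return {name: round(total_bn * share, 3) for name, share in shares.items()}


# Figures as reported in this entry (forecasts, not audited numbers).
openai_2024 = split_revenue(3.7, {"ChatGPT subscriptions": 0.73, "API": 0.27})
anthropic_run_rate = split_revenue(
    1.0, {"API via Amazon": 0.60, "own API": 0.25, "Claude subscription": 0.15}
)

print(openai_2024)         # roughly $2.7bn from ChatGPT and $1.0bn from the API
print(anthropic_run_rate)  # $bn of annualised run rate per channel
```

On these numbers, OpenAI's API business alone is about the size of Anthropic's entire projected run rate.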

Wednesday, Sep. 18th: AI start-ups are generating revenue faster than previous tech companies, with many reaching $1m within 11 months, compared to 15 months for SaaS companies. AI firms achieving $30m in revenue did so in 20 months, 5x faster than their SaaS counterparts. - FT

Thursday, Sep. 19th: I listened to a BG2 podcast episode with Bill Gurley and Brad Gerstner. - BG2

OpenAI's early lead in consumer AI is fuelled by network effects and strategic advantages. OpenAI has a dominant position in the consumer AI market because it has network effects, where more users generate more data, leading to better models and attracting even more users.

A combination of factors is contributing to the decrease in initial public offerings, including regulatory hurdles for going public, increased access to liquidity through secondary markets, and evolving motivations for both founders and investors.

There is concern about the potentially detrimental effects of too much capital flowing into the VC market. This "overfeeding" of companies could lead to operational inefficiencies, unsustainable burn rates, and hinder innovation rather than foster it.

People are enthusiastic about the potential for advanced AI voice applications. Advanced voice applications are likely to be very “reinforcing” for users, leading them to engage with AI products more and more. This could give companies that develop these applications a significant advantage over their competitors. However, there are some concerns that the high cost of running advanced AI voice applications, particularly when it comes to inference, may prevent these companies from offering them at an affordable price. It is still unclear what the best business model is for advanced AI voice applications, and how much consumers would be willing to pay for them. Some have suggested that consumers would be willing to pay a lot for a “perfect” AI assistant, but no such product exists yet.

The demand for AI inference is increasing and is expected to increase dramatically in the future. The increasing demand for inference is being driven by new AI models that rely heavily on inference, such as those that use inference-time reasoning (a technique where the model performs reasoning steps during the process of generating a response to a prompt, rather than just generating a response in a single step). OpenAI’s new AI model, Strawberry, uses inference-time reasoning and requires 100x more inference than models that use single-shot prompting. In the future, there will be much more machine-to-machine communication, which will also drive up the demand for inference.

The increasing demand for AI inference is creating constraints for companies developing AI products and services. This is because inference requires a lot of computational power, which is expensive. This is reflected in the fact that companies like OpenAI and Microsoft are reportedly “inference constrained,” and that the release of OpenAI’s new voice model has been slow, perhaps because it is very expensive to run.

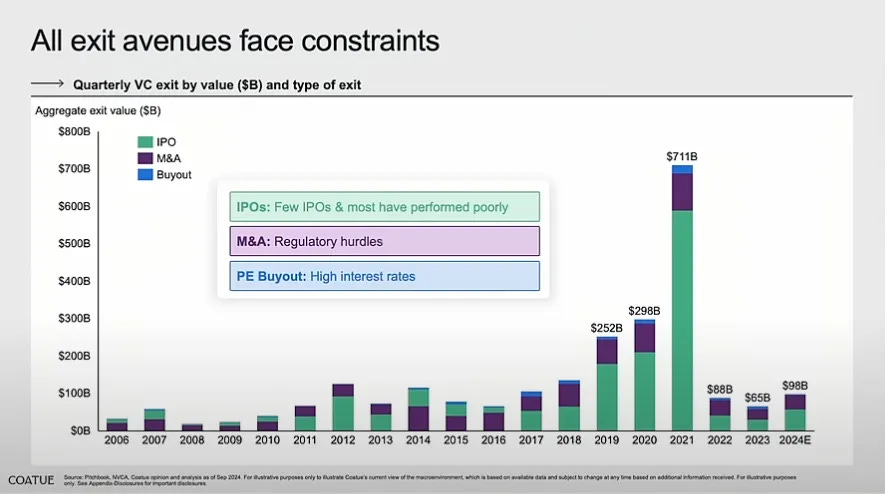

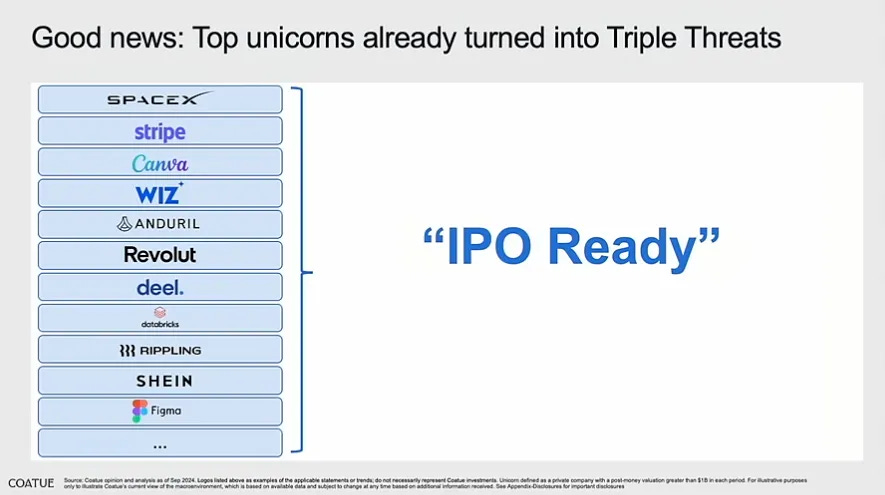

Friday, Sep. 20th: Thomas Laffont, GP at Coatue, talked about the unicorn economy. - All-In Summit

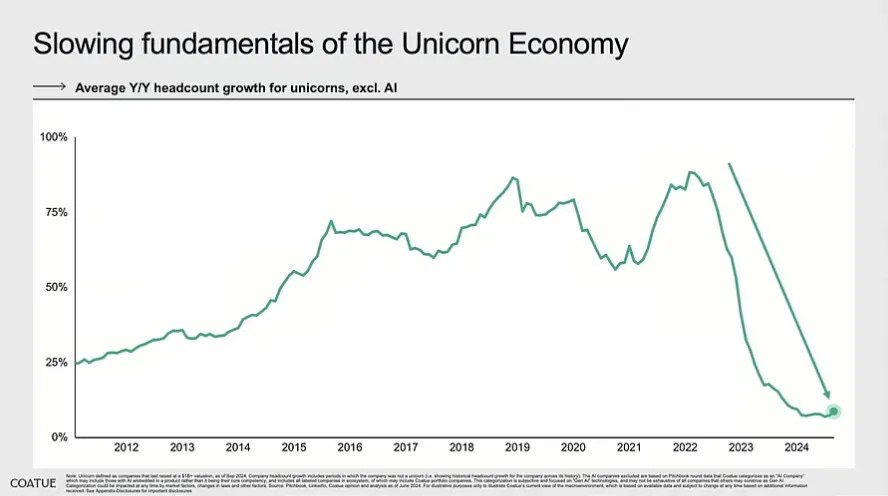

Funding remains healthy but exits are challenging. “Funding is still actually pretty healthy… but if we look at exits… we’re at pre-levels without really any substantial increases.”

Traditional exit options are limited. “The three kind of traditional exits for companies are blocked today… fewer buyouts, fewer IPOs, and M&A is restricted.”

VC distributions are at an all-time low. “Distributions from VCs back to their investors are essentially at all-time lows… almost back to Global Financial Crisis levels.”

Private companies are struggling to raise funds. “In today’s market, it takes longer to raise funds… and down rounds and bridge rounds make up almost 63% of total rounds.”

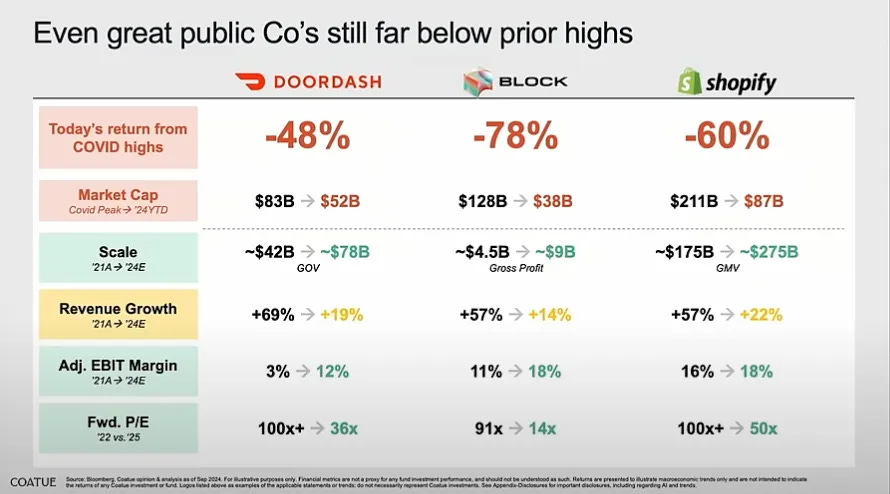

Unprofitable tech and SaaS companies are underperforming in the public market. “Unprofitable Tech and SaaS companies have been hit the hardest and have recovered the least since 2019.”

Public markets are demanding profitability, growth, and scale. “What the public market is telling you is that we want it all—we want you to be profitable, to grow, and to have scale.”

Restricting big companies from acquiring small ones reduces the value of small companies, as investors downgrade their worth. More critically, it removes the pressure on large companies to act quickly, since they no longer need to worry about competitors acquiring those small companies due to regulatory barriers.

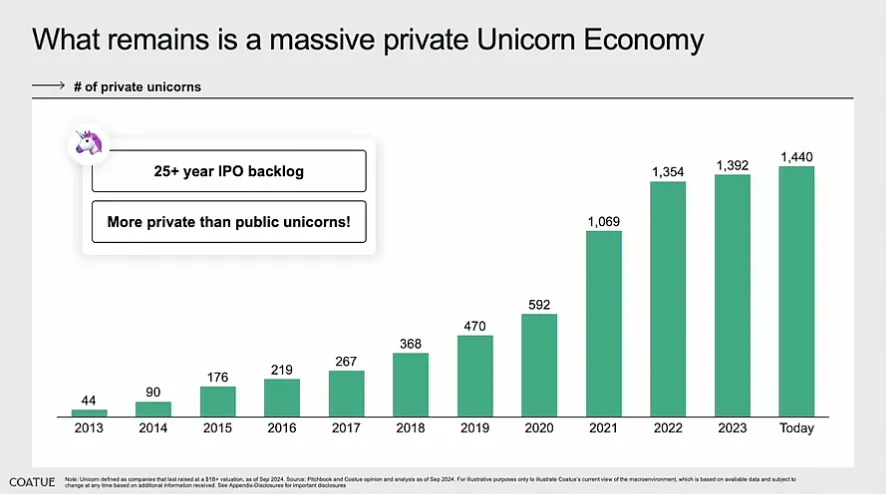

1,440 private companies are unicorns. There are more private tech companies worth more than $1bn than public ones.

Many unicorns in recent cohorts have yet to be re-priced and may never secure additional capital.

“Of all of the IPOs since 2020, if you look at the value created or destroyed from their IPO price you can see it as a cohort we've destroyed almost $225bn in market cap offset by the value creation of $84bn - so net negative as a cohort.”

“Technology is the great resetter of the business world. It can take an incredible company and turn it into dust (e.g. Nokia). The reason why I'm still an incredible optimist about our industry and about technology is because technology is still the most disruptive force.”

Saturday, Sep. 21st: Harry interviewed Eric Vishria, a general partner at Benchmark who has invested in companies including Cerebras, Confluent, Amplitude and Benchling. - 20VC

“I'm not a sector specialist and nobody at Benchmark is. In venture, you have to be moving. You have to be looking at new stuff because that's where the disruption's happening.”

Even if you’re not a sector specialist, you can always evaluate three things in all companies: (i) is the entrepreneur extraordinary? (ii) does the entrepreneur have a very interesting and unique insight? (iii) can the addressed market sustain a big company?

“I think that a lot of venture capital tend to follow a very spreadsheety, investor bankery type which does not work.”

“In a hyper competitive market, you have to really believe in the entrepreneur, in the heart of the insight and his execution ability.”

“We almost never think about like fund cycle or fund timing. We almost never think about portfolio construction. Finding good investment ideas is hard enough. Finding great companies is hard enough. Let's not over constrain it.”

Sunday, Sep. 22nd: Cerebras filed to go public. It’s an AI chipmaker. It aims to raise $1bn at a $7-8bn valuation. Cerebras is not profitable and its business heavily relies on one customer, G42, an Abu Dhabi-based AI company, accounting for over 80% of its revenue. - S1, Matt Turck

“For the most part, public market investors have had very limited options to play the Generative AI wave: essentially NVIDIA, and indirect bets on AI through the hyperscalers.”

“Timing is everything. AI is hot and Cerebras plays a very strategic part of the AI market (the well-documented AI infrastructure build, happening right now), at a very sensitive time (the even more well-documented GPU shortage, which may or may not last).”

“Return of the small IPO? While it’s growing very fast, the company is reasonably early in revenue – $136 million in revenue in the first six months of 2024, and $78.7M for the full year 2023.”

“A big part of NVIDIA’s competitive advantage lies not just in the hardware, but also in the software – CUDA, both a computing platform and programming model enabling developers to program NVIDIA GPUs directly.”

“87% of revenue in the first half of this year is from just one customer, G42 in the UAE”
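To make the concentration concrete, here is a quick back-of-the-envelope sketch that uses only the figures quoted above ($136M of revenue in H1 2024, $78.7M in full-year 2023, 87% of H1 2024 revenue from G42). The variable names are mine, and the output is simple arithmetic on rounded numbers, not figures taken from the S-1.

```python
# Figures quoted above (in $M); variable names are illustrative.
h1_2024_revenue_m = 136.0
fy_2023_revenue_m = 78.7
g42_share_h1_2024 = 0.87

# Revenue attributable to G42 vs. everyone else in H1 2024.
g42_revenue_m = h1_2024_revenue_m * g42_share_h1_2024
other_revenue_m = h1_2024_revenue_m - g42_revenue_m

# Six months of 2024 compared with the whole of 2023.
h1_vs_fy2023 = h1_2024_revenue_m / fy_2023_revenue_m

print(f"G42 revenue, H1 2024:       ${g42_revenue_m:.1f}M")
print(f"All other customers:        ${other_revenue_m:.1f}M")
print(f"H1 2024 / full-year 2023:   {h1_vs_fy2023:.2f}x")
```

In other words, on these numbers every customer other than G42 contributed under $20M in the first half of 2024.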

“Cerebras provides chips for high-performance computing, specifically for use in training & inferencing LLMs and other models. The company also provides a software layer (CSoft) closely integrated with their chips”

“Cerebras has raised $715M in venture capital, most recently at a $4.1B valuation. A number of well-known VC investors on the cap table, with Alpha Wave, Altimeter, Benchmark, Coatue, Eclipse Ventures and Foundation Capital all holding >5% of outstanding shares”

“We design processors for AI training and inference. We build AI systems to power, cool, and feed the processors data. We develop software to link these systems together into industry-leading supercomputers that are simple to use, even for the most complicated AI work, using familiar ML frameworks like PyTorch.”

“AI compute is comprised of training and inference. For training, many of our customers have achieved over 10 times faster training time-to-solution compared to leading 8-way GPU systems of the same generation and have produced their own state-of-the-art models. For inference, we deliver over 10 times faster output generation speeds than GPU-based solutions from top CSPs, as benchmarked on leading open-source models.”

“Our proprietary software platform, CSoft, is core to our solution and provides intuitive usability and improved developer productivity. CSoft seamlessly integrates with industry-standard ML frameworks like PyTorch and with popular software development tools, allowing developers to easily migrate to the Cerebras platform. CSoft eliminates the need for low-level programming in CUDA, or other hardware-specific languages.”

“Our wafer-scale chip architecture eliminates the need for distributed computing. This enables AI developers to use up to 97% less code when working with large models on our platform compared to on clusters of GPUs and greatly accelerates the speed of AI model development for larger-scale models.”

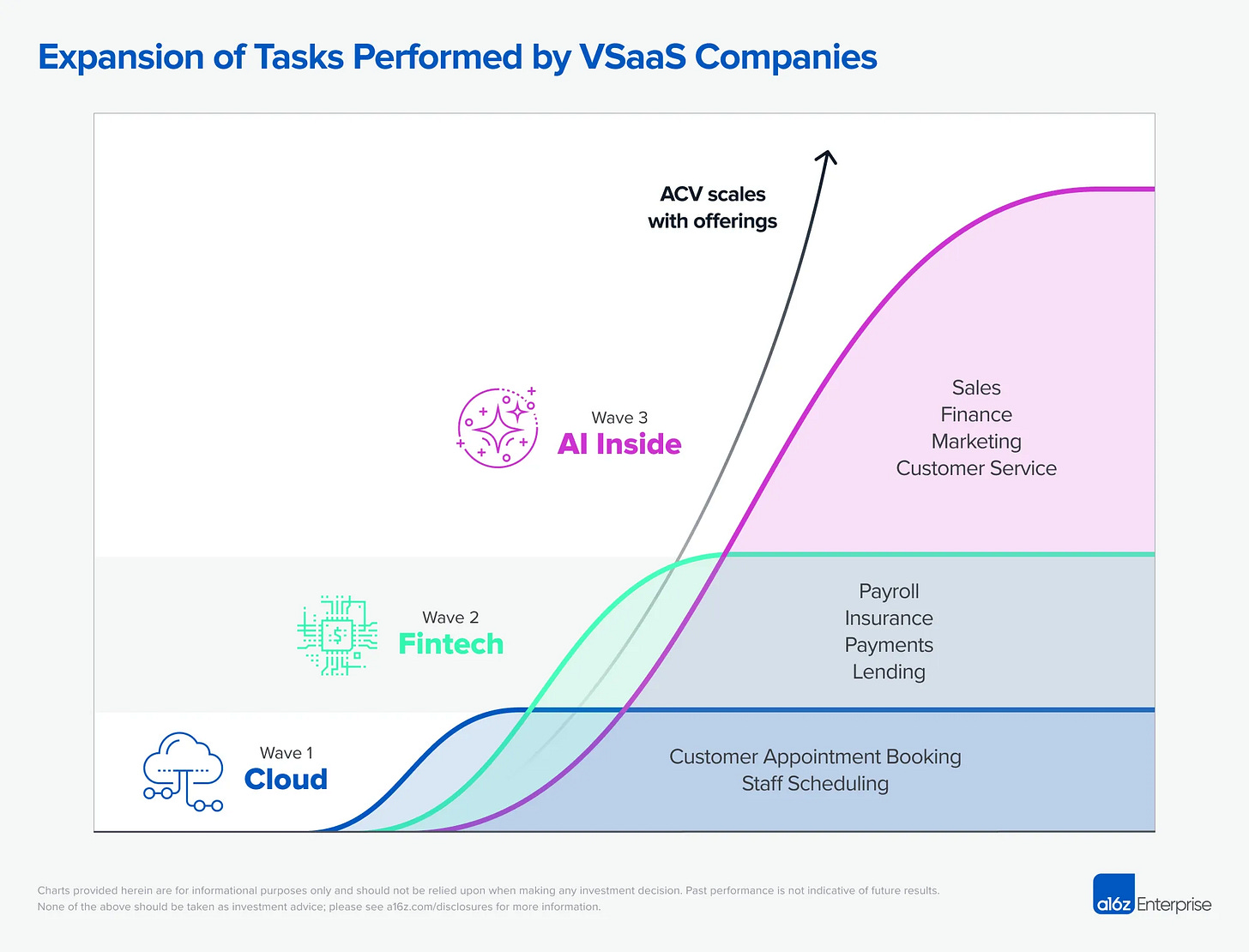

Monday, Sep. 23rd: a16z published a post discussing the impact of AI on vertical SaaS companies. - Angela Strange

“With AI, many customers of VSaaS can dramatically reduce internal and external labor spend on sales, marketing, customer service, operations, and finance.”

“With the right solution, many businesses consuming VSaaS can dramatically reduce internal and external labor spend on sales, marketing, customer service, operations, and finance. This should further increase the take rate of VSaaS companies by an additional 2-10x.”

“AI is unlocking a new era for vertical SaaS. In functions like marketing, sales, customer service, and finance, AI will augment, automate away, or in some cases, replace, many of the rote tasks currently performed by people, allowing VSaaS companies to offer even more with their software.”

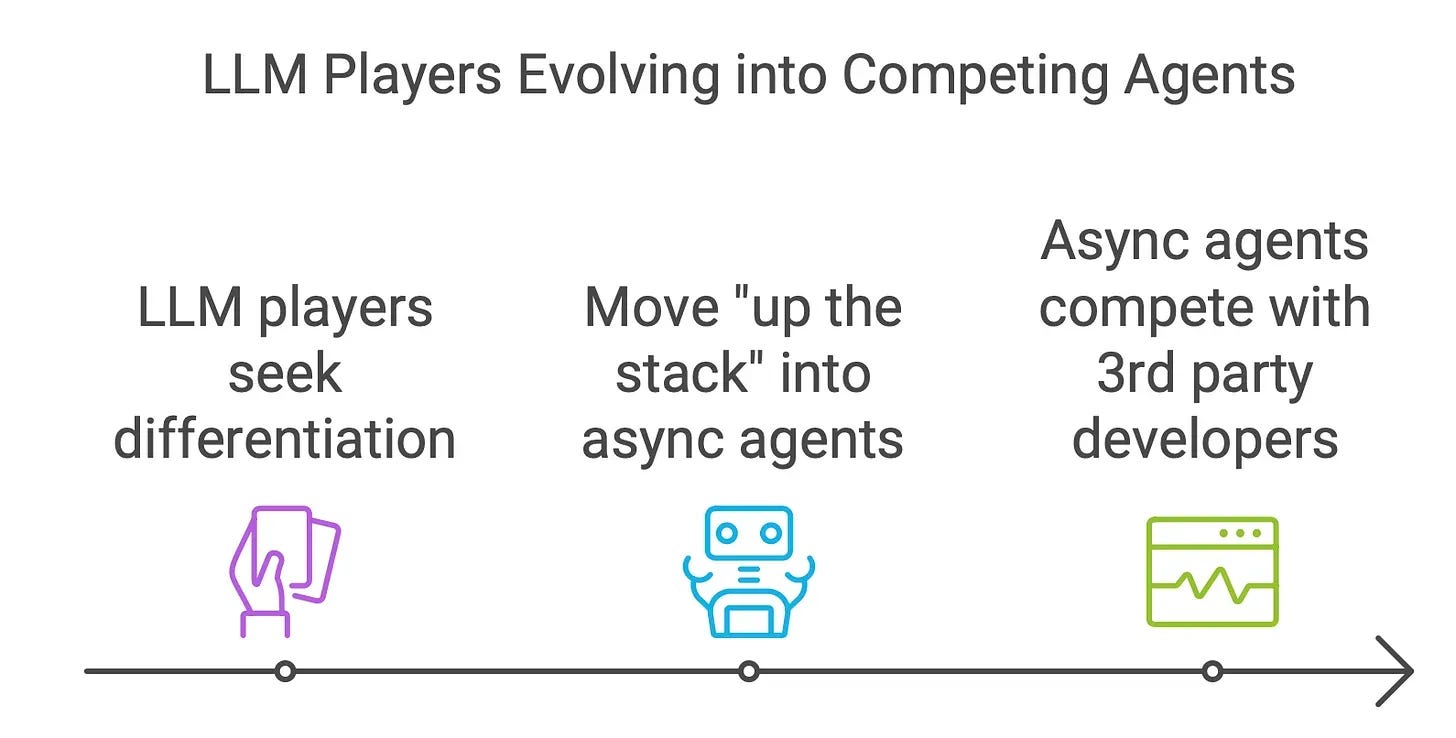

Tuesday, Sep. 24th: Sarah Tavel at Benchmark discussed how LLM providers could affect B2B AI startups if they start to move up the stack. - Sarah Tavel

“It seems inevitable that as the underlying foundation models become more powerful, the LLM players will seek to justify the enormous investment that has gone into training their models by moving "up the stack", and evolve from an API or chat interface, to async agents.”

“Right now, OpenAI/Anthropic/et al have an API consumable by almost anyone, but it's not hard to imagine a world in which they begin to compete with some of their API developers.”

“I'd guess the async coding agents are most vulnerable to this potential in the near term given the seemingly unbounded economic value of owning this use case, and the already existing product market fit LLMs have found with coding.”

“Why buy a specialized AI application that lets you automate internal IT ticket response, when the foundation model companies offer an AI agent that if you point it in the right direction with a job spec, will read your knowledge base, build its own integrations to connect to your existing systems of record (e.g., Jira), and then handle all the internal requests automatically?”

Sarah highlights 3 paths for startups to create defensibility: (i) build a network effect, (ii) capture proprietary or hard-to-access data, (iii) land grab in an overlooked vertical.

Wednesday, Sep. 25th: Paul Graham wrote on the debate about whether it's a good idea to follow your passion. Following your passion doesn’t always guarantee financial success but can be crucial for creating extraordinary work or launching startups. The best startup ideas often arise from personal interest. - Paul Graham

“If your main goal is to make money, you can't usually afford to work on what interests you the most. People pay you for doing what they want, not what you want. But there's an obvious exception: when you both want the same thing.”

“Many if not most of the biggest startups began as projects the founders were doing for fun. Apple, Google, and Facebook all began that way. Why is this pattern so common? Because the best ideas tend to be such outliers that you'd overlook them if you were consciously looking for ways to make money.”

“If you don't need to make much, you can work on whatever you're most interested in; if you want to become moderately rich, you can't usually afford to; but if you want to become super rich, and you're young and good at technology, working on what you're most interested in becomes a good idea again.”

“You don't necessarily need a job doing x in order to work on x; often you can just start doing it in some form yourself.”

Thursday, Sep. 26th: CRV will return to its LPs the $275m still uninvested from its $500m growth fund, arguing that the risk-reward ratio at growth stages is poor, with most companies overvalued relative to their potential. CRV will refocus on its core business of early-stage investing, where it is deploying a $1bn fund. - NYT, Techcrunch

“The reason was the maths no longer work. In order to generate the kind of returns that CRV’s investors expected, many start-ups — far more than ever before — would have to wind up being worth $10bn or more. “The data just doesn’t support that,” said Saar Gur, a partner at the firm. “There aren’t many really big foundational companies and big outcomes.””

“This is the second time the firm has cut its fund size. In 2002, after the dot-com bubble broke, CRV slashed its $1.2 billion fund to just $450 million.”

“Some limited partners have been frustrated with the expansion of venture capital funds in recent years.”

“Bigger funds also tend to have middling investment performance. Smaller venture capital funds have historically minted the highest returns.”

Friday, Sep. 27th: Molten acquired a 97% stake in Connect’s first fund for about £18.6m. Molten has previously acquired several LP positions, including in Seedcamp Funds I, II, and III, as well as Earlybird DWES Fund IV and Earlybird Digital East Fund I. This acquisition illustrates the growing need for GPs to generate DPI for their investors, as well as the emerging liquidity options for GPs, who can sell their funds without having realised exits. - Molten

“This strategic move is part of Molten's ongoing commitment to acquiring LP positions in funds made up of later stage portfolios with strong potential for near-term realisation.”

“85% of the value of the portfolio is driven by two standout companies: Typeform, a leading cloud-based SaaS platform for survey data collection, and Soldo, an expense management platform.”

Saturday, Sep. 28th: Lightspeed published a deep-dive on conversational AI. - Lightspeed

“Commercial voice applications have evolved dramatically over the last 50 years.

The first interactive voice response (IVR) system appeared in the 1970s, requiring end users to use a keypad to navigate through voice prompts.

In the last two decades, we’ve seen this traditional, touch-tone model give way to something smarter: voice-driven phone trees, allowing customers to use natural language commands instead of just pressing buttons.

Now, we are entering the era of LLM-based systems, where end users don’t just talk to software, but have conversations with it. These systems understand the nuances and context just like a human would.”

“In the short term, the most successful voice companies will be focused on vertical apps in fields like healthcare and hospitality, as well as apps designed for relatively simple tasks like scheduling. Eventually, however, these new voice apps are poised to wedge their way into broader SaaS platforms, significantly expanding the total addressable market.”

“AI-driven voice apps rely on three basic reference architectures devoted to ingesting natural language, interpreting it, and generating an intelligent response:

Speech to Text (STT) ingestion. Capturing spoken words and translating them into text.

Text-to-Text (TTT) reasoning. Utilising an LLM to tokenise the text transcription and formulate a written response.

Text-to-Speech (TTS) generation. Translating that written response into spoken language.”
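The three stages above can be sketched as a simple pipeline. This is a minimal illustration, not Lightspeed's or any vendor's implementation: the `VoicePipeline` class and the `fake_*` functions are hypothetical stand-ins, and "audio" is represented as UTF-8 bytes so the example runs without any speech or LLM service.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class VoicePipeline:
    """Chains the three stages: speech-to-text, text-to-text, text-to-speech."""
    stt: Callable[[bytes], str]   # audio in -> transcript
    ttt: Callable[[str], str]     # transcript -> written reply (an LLM in practice)
    tts: Callable[[str], bytes]   # written reply -> audio out

    def respond(self, audio_in: bytes) -> bytes:
        transcript = self.stt(audio_in)
        reply_text = self.ttt(transcript)
        return self.tts(reply_text)


# Hypothetical stand-ins: real systems would call speech and LLM services here.
def fake_stt(audio: bytes) -> str:
    return audio.decode("utf-8")


def fake_ttt(transcript: str) -> str:
    return f"You said: {transcript}"


def fake_tts(text: str) -> bytes:
    return text.encode("utf-8")


pipeline = VoicePipeline(stt=fake_stt, ttt=fake_ttt, tts=fake_tts)
print(pipeline.respond(b"book a table for two"))
```

Because each stage runs in sequence, end-to-end latency is the sum of the three stages, which is one reason near real-time performance is hard to deliver.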

There are 3 key challenges for voice AI startups: (i) having humans in the loop when AI alone is not enough, (ii) providing near real-time performance while lowering latency and cost, (iii) finding the right GTM strategy to grow at an exponential pace.

Sunday, Sep. 29th: I read an interview with Stripe co-founder John Collison about Ireland. - The Currency

“John Collison retains deep roots in the country of his birth. He has funded a think-tank, backed university programmes, renovated a stately pile in the midlands, and even bought an airport.”

“The general worry I have is, is there some complacency that we need to be quite paranoid about? Lots of our successes came out of quite radical ideas.”

“Collison argues that much of what the company has achieved has taken longer than initially planned. “Because we set the business up to be self-sustaining, to be in a good spot, to not be reliant upon investor capital, it is okay if certain things take longer than we anticipated.””

“In Ireland, we are blown back by the fire hose of demand and it is the supply of housing, of infrastructure, of all these things where the issues are.”

“When you look at a lot of the housing debates, there is an implicit, sometimes explicit, concern about overbuilding despite the fact that overbuilding is the very last problem we should be worried about right now.”

Monday, Sep. 30th: Duolingo is integrating AI into its platform with the vision of replacing human tutoring with AI-driven tools such as conversational characters and interactive lessons. - Forbes

“Late last year, Duolingo decided not to renew the contracts of about 10% of its contracted workforce who did translations and lesson writing, instead opting to use AI for those tasks in some cases.”

“Duolingo’s AI may put one-on-one human tutors out of business. I understand that. But I think net-net it is better if everybody has access to one.”

“AI could unlock new possibilities for learning, bringing high-quality education to the masses. He thinks languages can help lift people out of poverty, noting that, for non-native speakers, learning English instantly broadens a person’s earning potential and opens up a whole new world of jobs.”

“Duolingo unveiled its first step in that direction: An interactive feature in which users partake in “video calls” with Lily, one of Duolingo’s beloved mascots — a purple-haired, sarcastic, cartoon woman. Chatting with Lily allows people to practice conversing in other languages as if FaceTiming with an AI friend, with dialogue generated by OpenAI’s GPT-4o model. It’s part of a $30 a month subscription tier, called Duolingo Max, which the company debuted last year for its premium AI features, including one that tells people why they answered a question wrong during a lesson. Another new AI addition is a mini-game called Adventures, which puts users in interactive situations to practice their language skills, like ordering a coffee from a cafe or getting their passport checked.”

“In addition to its AI tutor efforts, the company has made another big AI investment in its Duolingo English Test (DET), the app’s version of TOEFL, or the Test Of English as a Foreign Language, which is widely used to certify English proficiency for university admissions or visa applications. The DET, which costs $59 and first launched in 2016, gained traction during the pandemic because it could be taken remotely. Duolingo now uses AI for every element of the DET exam, von Ahn said, from generating the questions to making sure people don’t cheat. One security feature, for example, uses facial recognition to make sure a test taker isn’t looking offscreen at notes. Right now, the test accounts for 10% of Duolingo revenue, and von Ahn wants it to become a bigger part of the pie as the company focuses increasingly on non-English speaking users.”

Thanks to Julia for the feedback! 🦒 Thanks for reading! See you next week for another issue! 👋