Hi, it’s Alexandre. I’m investing in Seed and Series A European vertical solutions (vSol): industry-specific solutions that aim to become the operating system of their industry by combining dynamics from SaaS, marketplaces and fintech. Overlooked is a weekly newsletter about venture capital and vSol. Today, I’m sharing the most insightful tech news of August.

Before jumping into the newsletter, I’m thrilled to tell you that I’ve joined 20VC as Partner, where I’ll be mainly focusing on our Series A investments.

Joining Harry, Kieran and Paul was an easy decision.

At 20VC, we put content at the core of everything we do. This resonates with what I’ve been doing for years with Overlooked, my newsletter on vertical SaaS, and with the French Tech Ecosystem report I publish every year.

At 20VC, we are building a venture platform differentiated through its content (22m monthly listeners) and network (2,800 previous guests).

Harry, Kieran and Paul are incredible human beings and I already know it’s going to be an opportunity of a lifetime to work with them. I felt at home as soon as I met them, and we share similar core values that I know will make us a great team: putting founders first, being humble, working hard and playing as a team.

At 20VC, we aim to back the most ambitious founders in North America and Europe from Pre-Seed to Series A. If that's you, ping me! As a reader of the newsletter, you can expect bigger content projects going forward!

Thursday, Aug. 1st: I listened to CCC’s Q2-2024 earnings call. CCC is a vertical SaaS company selling solutions to both insurers and car repair shops to digitise the car repair process. - CCC

CCC ONE generates $400m in annual revenue. It’s CCC’s cloud-based estimating and shop management software for car repair shops, serving both single-store and multi-store operators. It competes directly with VC-backed players like ShopMonkey and Autoleap.

Customers consolidating their toolstack on CCC. “We completed the successful, on schedule roll-out of our full suite of auto physical damage or “APD” solutions for a top-20 insurer as they transitioned from multiple vendors to the CCC platform.”

Reaching 30k car repair shops in the US. “We have also added over 600 new repair facilities so far in 2024. This growth pushed us across a major milestone: we now have over 30,000 repair facilities on the CCC platform.”

“We believe that our investments over the last three years have created an exciting pipeline of new solutions with greater breadth and depth than at any time in our history.”

Breaking down revenue growth into 3 drivers: (i) cross-sell/upsell from mature products, (ii) new logos, (iii) emerging solutions. “Total revenue in the second quarter was $232.6 million, up 10% from the prior-year period.

Approximately 7 points of our growth in Q2 was driven by cross-sell, upsell, and adoption of our solutions across our client base, including repair shop upgrades, continued adoption of our digital solutions, and the ongoing strength in Casualty and Parts.

Approximately 3 points of growth came from our new logos – mostly our repair facilities and parts suppliers.

About 1 point of growth in Q2 came from our Emerging Solutions, mainly Diagnostics, Estimate – STP and the new, adjacent Casualty solutions.”
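As a quick sanity check on this breakdown, the sketch below adds up the three drivers and backs out the implied prior-year quarter. Each driver is described as "approximately", so the contributions sum to about 11 points against the reported 10%, presumably because of rounding; the implied Q2-2023 revenue is likewise only approximate, since it is derived from the rounded 10% growth rate. The driver labels are my own shorthand, not CCC's reporting categories.

```python
# Approximate contribution (percentage points) of each growth driver to CCC's
# Q2-2024 year-over-year revenue growth, as described on the earnings call.
drivers = {
    "cross-sell / upsell / adoption": 7,
    "new logos": 3,
    "emerging solutions": 1,
}

reported_revenue_m = 232.6  # Q2-2024 total revenue, $m
reported_growth = 0.10      # reported YoY growth ("up 10%"), itself rounded

# The "approximately" figures need not add up exactly to the reported rate
sum_of_points = sum(drivers.values())

# Implied prior-year quarter revenue, backed out from the rounded growth rate
prior_year_revenue_m = reported_revenue_m / (1 + reported_growth)

print(f"Sum of driver contributions: {sum_of_points} pts")
print(f"Implied Q2-2023 revenue: ${prior_year_revenue_m:.1f}m")
```

The gap between 11 and 10 points is a reminder to read such call-level breakdowns as directional rather than exact.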

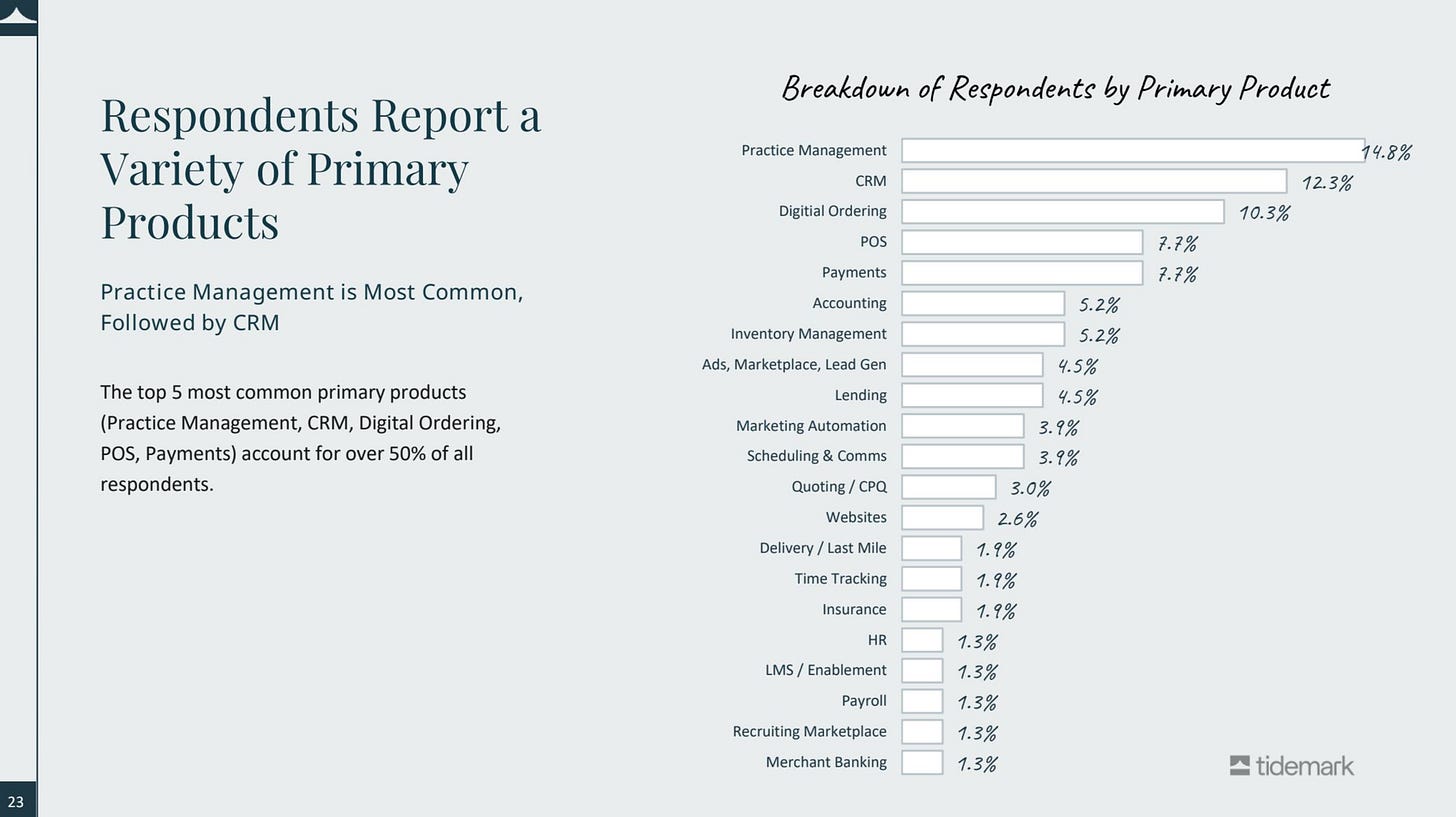

Friday, Aug. 2nd: Tidemark published a benchmark report on vertical SaaS. Congrats to Michael Tan for his outstanding work on this project! - Tidemark

“Nearly 40% of respondents offered a fintech product. Fintech products can address key merchant needs while driving ARPA for Vertical SaaS Vendors. For the survey respondents, Payments at 30% was by far the most common fintech expansion product. This was followed by Lending at 11% and Payroll at 9%.”

When integrated into vertical SaaS platforms, payments exhibit the highest attach rate at 50%, outperforming other financial services such as lending (32%) and payroll (10%).

31% of respondents have launched an AI product and a further 39% plan to launch one in the next 12 months. In terms of pricing, 24% of respondents with an AI product offer it completely free, while 13% include it as a premium feature in their premium pricing tier. For direct monetization structures, 46% do so via a SaaS subscription, 11% via transaction fees, and 7% via a per-employee-per-month structure.

Saturday, Aug. 3rd: Lux published its Q2-2024 letter to LPs. - Lux Capital

Lux emphasizes its focus on investing in “rebel scientists” who challenge conventional thinking. These visionaries are seen as critical to advancing human knowledge and technology, driving innovation in areas like AI, biotechnology, and aerospace.

“No institution is more important for progress than the laboratory, the epicenter of fundamental shifts in our understanding of the world.”

“The federal share of total R&D funding went from around 67% in the 1960s to less than 20% in recent years thanks to expansive spending from the private sector, which now accounts for 75% of R&D. Notably, the private sector is doing more basic research, taking share from universities.”

While there is immense potential with AI, the sector is also experiencing significant volatility. Lux focuses on companies with defensible technology and long-term potential.

“Over the past two decades, Lux Labs has been an informal initiative that embodies Lux’s commitment to creating companies from scratch in overlooked areas of the hard sciences. We’ve been at the forefront of de novo company creation, turning research from university labs and internally developed theses into over 20 groundbreaking ventures including: Kurion (a nuclear waste treatment company that aided the cleanup of Japan’s Fukushima disaster), Kallyope (in metabolic disease and obesity), Cajal (in neurodegenerative disease), Genocea (in vaccines), Aera (in genetic medicines) and many more.”

“We expect this spin-out and start-up strategy to be repeatable for Lux as big tech companies focus on profitability and cut spending on non-core research projects.”

“Today’s AI investors are always seeking Yesterday’s Yes: people want to be invested today where they should've been investing years ago.”

“The vertiginous ascent of capital infusion into AI yields a hype-hope continuum, where short-term disappointment may yet yield long-term delight echoing the trajectory of the internet's evolution.”

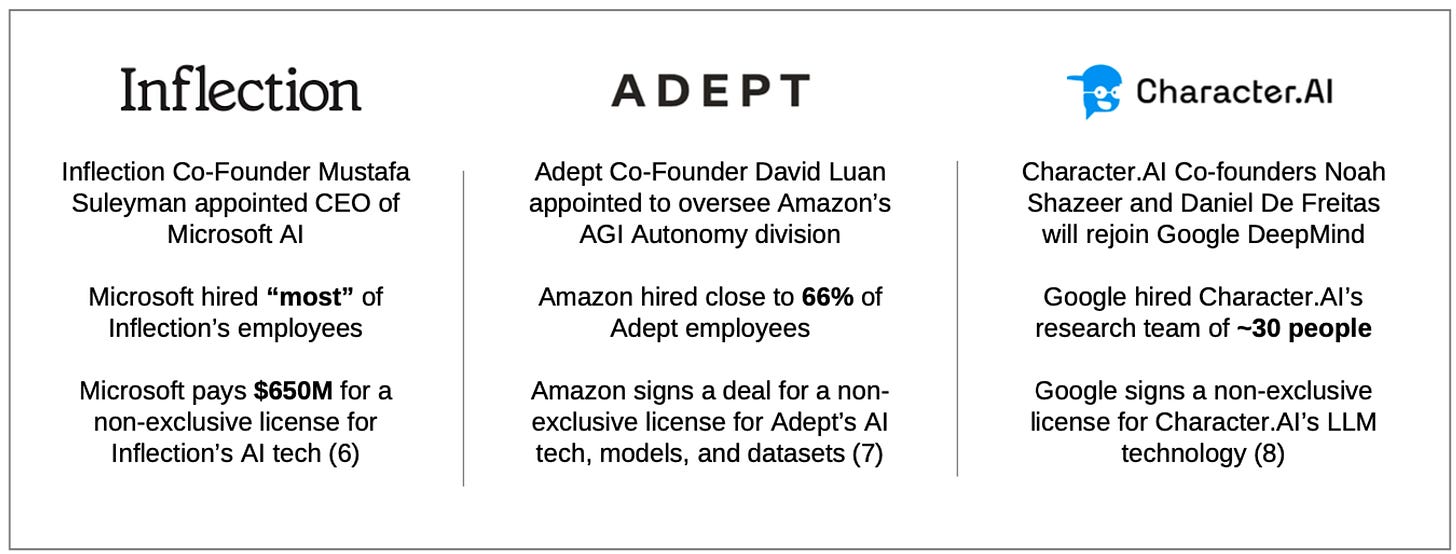

“AI isn't a novel distribution platform, which means that many of the gains will accrue to the inertia of incumbency. Take Microsoft, which is using regulatory arbitrage to control OpenAI while also skirting merger scrutiny with Inflection by licensing IP and acqui-hiring its key talent (paying out investors with a modest but not fund-making multiple).”

“Far from being a democratized asset class, VC is and will remain a rarified ecosystem where only a select cadre of firms consistently access the most promising opportunities. The vast majority of new participants engage in what amounts to a financial fool's errand. We continue to expect the extinction of as many as 30-50% of VC firms.”

Sunday, Aug. 4th: Procore published its Q2-2024 earnings. It’s a cloud-based construction management platform initially focused on streamlining communication among all stakeholders on a construction project. - Procore

Procore is reorganising to scale from $1bn to $10bn in revenue, putting general managers in charge of key geographic markets and giving them more leeway to adapt the product and the go-to-market to local specificities.

“I'd like to spend some time today discussing changes that we're making to the business. This year, we are guiding to surpass $1bn in full year revenue. And while this is an important milestone for Procore, it also represents a key inflection point as we move towards becoming a multibillion revenue company.”

“I'd like to walk through some of the specific changes that we're making to our go-to-market operating model. First, we're moving from a matrix organization structure to a general manager model.” […] “General managers will have combined teams reporting into them, which should streamline engagement across the customer life cycle. The general managers roles will cover the following regions: North America, Europe, Australia, New Zealand and the Middle East, which represents our current geographic coverages. In the future, when we elect to move into new countries within these regions, the general managers of these respective regions are going to be better positioned to determine specific strategies and tactics for that particular country.” […] “This creates nimble teams that can quickly address on-the-ground dynamics as they arise. General managers will have the autonomy to respond to the local nature of construction and to tailor and customize their own motions specific to the needs of the markets that they serve.”

International revenues are growing at a faster pace than total revenues. “Total revenue in Q2 was $284 million, up 24% year-over-year, and international revenue grew 31% year-over-year.” […] “Q2 non-GAAP operating income was $50 million, representing an operating margin of 17.6%”

Procore’s Pay adoption is increasing. “Moving on to an update on Pay. Q2 represented only the second full quarter of general availability, and we continue to see strong adoption here. Given the ramp period associated with onboarding Pay, we do not expect this to be a material contributor to our financial results this year, but we are pleased with the progress we have seen thus far and believe this represents a significant opportunity to drive greater cross-sell as we further extend our platform functionality throughout the construction life cycle.”

Leveraging partners as a go-to-market channel. “It's a huge opportunity for Procore to capitalize on the opportunity for channel, both in the U.S. and globally.”

“Achieved a gross revenue retention rate of 94% in the second quarter. Number of organic customers contributing more than $100,000 of annual recurring revenue totaled 2,191 as of June 30, 2024, an increase of 20% year-over-year. Added 152 net new organic customers in the second quarter, ending with a total of 16,750 organic customers.”

Monday, Aug. 5th: I listened to Sam Altman discuss OpenAI and the future of AI on Lex Fridman’s podcast. - Lex Fridman

“I think all of these models understand something more about the world model than most of us give them credit for.”

“One thing we have to do before releasing the [Sora] system is just get it to work at a level of efficiency that will deliver the scale people are going to want from this so that I don’t want to downplay that.”

“People talk about how many jobs is AI going to do in five years. […] The way I think about it is not what percent of jobs AI will do, but what percent of tasks will AI do on over one time horizon. So if you think of all of the five-second tasks in the economy, five minute tasks, the five-hour tasks, maybe even the five-day tasks, how many of those can AI do? I think that’s a way more interesting, impactful, important question than how many jobs AI can do because it is a tool that will work at increasing levels of sophistication and over longer and longer time horizons for more and more tasks and let people operate at a higher level of abstraction.”

“I expect that the delta between [GPT] 5 and 4 will be the same as between [GPT] 4 and 3 and I think it is our job to live a few years in the future and remember that the tools we have now are going to kind of suck looking backwards at them and that’s how we make sure the future is better.”

“One thing I’ve been using [GPT-4] for more recently is sort of like a brainstorming partner. There’s a glimmer of something amazing in there.” “One of the other things that you can see a very small glimpse of is when [GPT-4] can help on longer horizon tasks, break down something in multiple steps, maybe execute some of those steps, search the internet, write code, whatever, put that together. When that works, which is not very often, it’s very magical.”

“In some sense, we did have to do two things. We had to invent the underlying technology and then we had to figure out how to make it into a product people would love, which is not just about the actual product work itself, but this whole other step of how you align it and make it useful.”

“The thing that I find most interesting is people, mostly younger people, who use it as their default start for any kind of knowledge work task.” “The most interesting thing to me is the people who just use it as the start of their workflow.”

“What people want is a model that gets to know me and gets more useful to me over time.” “We all gain from experience over the course of our lives in varying degrees, and I’d like my AI agent to gain with that experience too.”

“I think compute is going to be the currency of the future. I think it will be maybe the most precious commodity in the world, and I think we should be investing heavily to make a lot more compute.” “Intelligence is going to be more like energy or something like that, where the only thing that I think makes sense to talk about is, at price X, the world will use this much compute, and at price Y, the world will use this much compute. Because if it’s really cheap, I’ll have it reading my email all day, giving me suggestions about what I maybe should think about or work on, and trying to cure cancer, and if it’s really expensive, maybe I’ll only use it, or we’ll only use it, to try to cure cancer.”

“I think the world is going to want a tremendous amount of compute. And there’s a lot of parts of that that are hard. Energy is the hardest part, building data centers is also hard, the supply chain is hard, and then of course, fabricating enough chips is hard.”

“What are the pros and cons of the competition in the space and competing with Google, Meta, xAI, and others? I think I have a pretty straightforward answer to this that maybe I can think of more nuance later, but the pros seem obvious, which is that we get better products and more innovation faster and cheaper, and all the reasons competition is good. And the con is that I think if we’re not careful, it could lead to an increase in sort of an arms race that I’m nervous about.”

“People use extremely different definitions for what AGI is. It makes more sense to talk about when we’ll build systems that can do capability X or Y or Z, rather than when we fuzzily cross this one mile marker.”

“I expect that by the end of this decade and possibly somewhat sooner than that, we will have quite capable systems.”

“I think when a system can significantly increase the rate of scientific discovery in the world, that’s a huge deal. I believe that most real economic growth comes from scientific and technological progress.”

Tuesday, Aug. 6th: I read Bessemer’s investment memorandum on Mindbody, a vertical SaaS-enabled marketplace for fitness, wellness and beauty businesses, in which Bessemer led an $11m Series B in July 2010. - Bessemer

“Mindbody launched its SaaS application in 2003 and has become the clear leader in the fitness market with over 8,500 clients, $867k in MRR and $1m in monthly revenues (as of May 31).”

“We are impressed with Mindbody’s position as the de facto standard in the yoga and pilates market (with over 30% share in both). It has gradually expanded into other verticals, including fitness centers, martial arts studios, dance studios, spas, and salons.”

“The company has grown from $4.4m in recurring revenue in 2008 to $6.8m in 2009 and is currently growing at a 60% CAGR, on plan to reach $11.4m in 2010.”
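The memo does not say over which window the 60% CAGR is measured. Assuming it runs from the 2008 actual to the 2010 plan, the quoted figures can be checked with a minimal sketch like the one below; note that the 2010 value is a plan, not a result.

```python
# Mindbody recurring revenue ($m) as quoted in Bessemer's 2010 memo.
# The 2010 figure is the company's plan, not an actual.
recurring_revenue_m = {2008: 4.4, 2009: 6.8, 2010: 11.4}


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values `years` apart."""
    return (end_value / start_value) ** (1 / years) - 1


# Single-year growth rates
growth_2009 = recurring_revenue_m[2009] / recurring_revenue_m[2008] - 1
growth_2010 = recurring_revenue_m[2010] / recurring_revenue_m[2009] - 1

# Two-year compounded rate (assumed window: 2008 actual -> 2010 plan)
cagr_2008_2010 = cagr(recurring_revenue_m[2008], recurring_revenue_m[2010], 2)

print(f"2009 growth: {growth_2009:.0%}")
print(f"2010 planned growth: {growth_2010:.0%}")
print(f"2008-2010 CAGR: {cagr_2008_2010:.0%}")
```

This prints roughly 55%, 68% and 61%, so the "60% CAGR" is consistent with the two-year compounded rate under that assumption.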

“The company has acquired customers through a capital-efficient telesales effort, with a gross profit customer acquisition payback of ~13 months.”

“We also believe ARPU and margin per account will grow as Mindbody increases its payment processing penetration and starts to drive traffic from its newly-launched consumer-facing offering.”

“Pricing ranges from $29/mth for a single professional facility to $155/mth for facilities with 20+ professionals, with an average price of $75/mth per client.”

“Our sense is that Mindbody’s product is successful due to weak competition. Most of Mindbody’s competitors are on-premise incumbents founded in the mid-1990s that lack the advantages of SaaS. The handful of SaaS competitors are poorly capitalized and sub-scale. […]”

“About 30% of Mindbody subscribers and 70% of new subscribers process payments through Mindbody. This accounts for about 20% of recurring revenues and generates about $25/mth in additional MRR (bringing the total MRR per subscriber to about $100/mth).”

“Mindbody employs an efficient marketing-driven telesales model. The company relies heavily on SEM, outbound cold calling, and tradeshows to drive leads. It subsequently converts those leads by phone with an inside sales team. At a blended customer acquisition cost of ~$1,100, Mindbody typically has a 13-15 month gross profit customer payback period.”
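The payback figure is usually computed as CAC / (monthly revenue per customer × gross margin). The memo quotes the blended CAC (~$1,100) and total MRR per subscriber (~$100/mth) but not the gross margin, so the sketch below assumes that formula and backs out the gross margin a 13-15 month payback would imply. Treat the output as illustrative: Bessemer may have used a different revenue base or margin definition.

```python
def payback_months(cac: float, monthly_revenue: float, gross_margin: float) -> float:
    """Months of gross profit needed to recoup the customer acquisition cost."""
    return cac / (monthly_revenue * gross_margin)


def implied_gross_margin(cac: float, monthly_revenue: float, months: float) -> float:
    """Gross margin that would produce a given payback period (same formula, inverted)."""
    return cac / (monthly_revenue * months)


CAC = 1_100               # blended customer acquisition cost, $ (from the memo)
MRR_PER_SUBSCRIBER = 100  # subscription + payments MRR per subscriber, $/mth (from the memo)

# Assumption: payback is measured on total MRR, including payments revenue
for months in (13, 15):
    gm = implied_gross_margin(CAC, MRR_PER_SUBSCRIBER, months)
    print(f"{months}-month payback implies ~{gm:.0%} gross margin")
```

Under these assumptions, it prints an implied gross margin of roughly 85% for a 13-month payback and 73% for a 15-month payback, which is in the range one would expect for a SaaS business.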

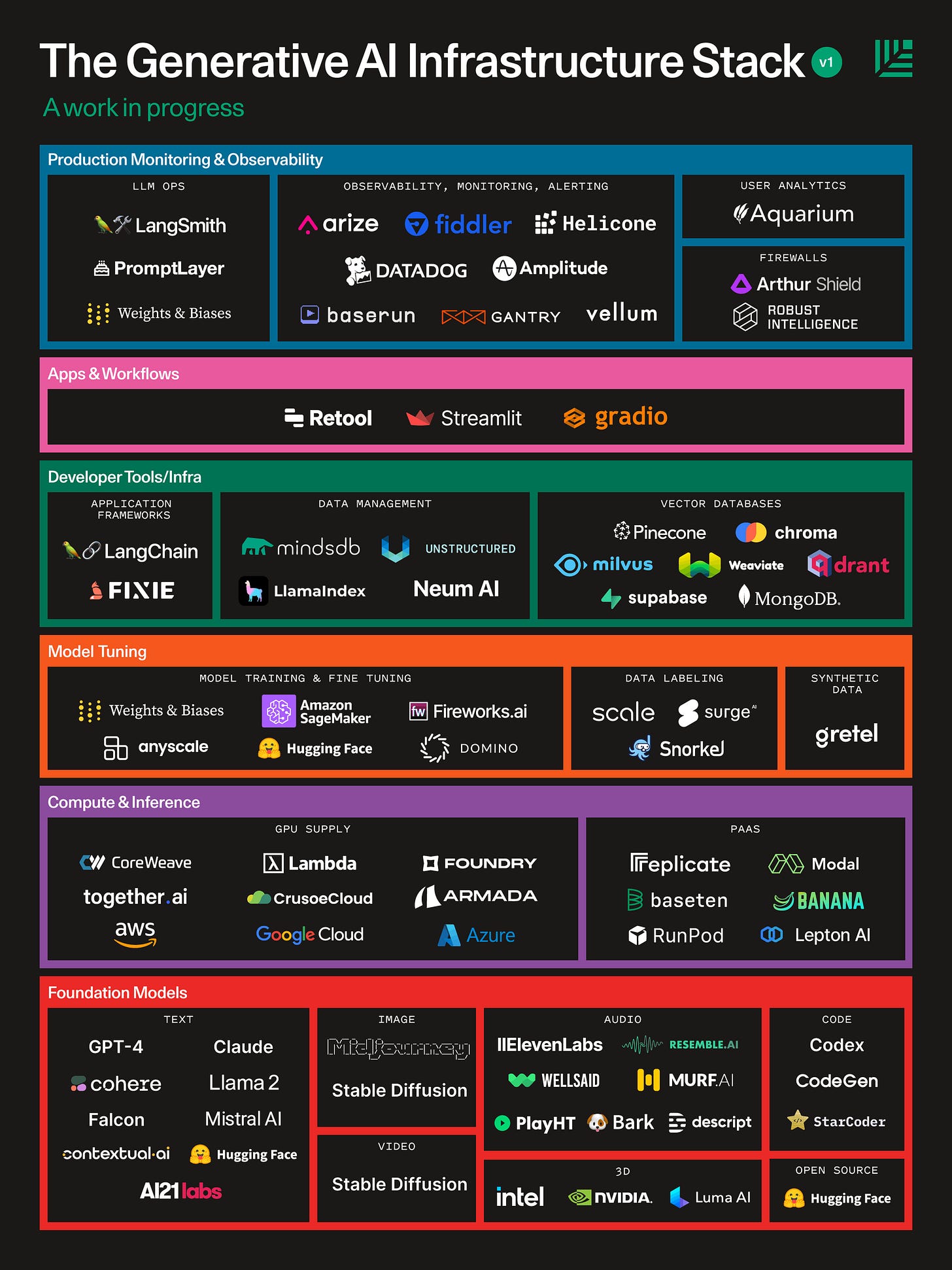

Wednesday, Aug. 7th: I read a market memorandum from Sapphire summarising the current market sentiment around Gen. AI and showcasing the revenues generated by Gen. AI companies. - Sapphire

"In fairness, many analysts and market participants have been warning that Generative AI is over-hyped as a technology, at least in its current state of development, and that much of the investment activity across both public and private markets appears, in their opinion, bubble-like. However, despite those calls for caution, the AI-optimist narrative seems to have held the upper hand through 2023 and the first half of 2024, creating an environment where increased AI investment was generally rewarded by investors and championed by public and private company executives. However, as we enter the final third of the year, we believe things have started to change.”

“We believe this earnings season seems to have marked a transition point as 1) investors are becoming more pointed in their questions to executives regarding the timelines for returns on the ever-increasing levels of AI infrastructure spend, and 2) executives are more comfortable plainly stating what has been obvious for a long time—they are willing to err on the side of over vs. under-investment given the intensely competitive market environment and potentially transformative impact of the technology.”

“The one way I think about it is, when you go through a curve like this, the risk of underinvesting is dramatically greater than the risk of overinvesting for us here. Even… if it turns out we are overinvesting, these are [infrastructures] which are widely useful for us, they have long useful lives, and we can apply it across and we can work through that. But I think not investing to be at the front here, I think, definitely has much more significant downsides.” - Sundar Pichai (CEO at Google)

“At this point, I'd rather risk building capacity before it is needed, rather than too late, given the long lead times for spinning up new infra projects. And as we scale these investments, we're of course going to remain committed to operational efficiency across the company.” - Mark Zuckerberg (CEO at Meta)

On AI startups being acqui-hired by hyperscalers: “Some combination of (i) a lack of willingness or ability to raise the type of capital required to push through to the next frontier of model development, (ii) recognition that they were operating in rapidly commoditizing areas of the market, (iii) inability to find product-market fit or a clear business model, (iv) challenging hiring and retention dynamics in a hypercompetitive market, and (v) acknowledgment that the hyperscalers have better capital, compute, and data resources + deep research talent benches.”

“The global-scale AI data center build-out has always been good for the startup ecosystem. If the hyperscalers are committed to overbuilding, it will further enhance access and reduce costs.”

Thursday, Aug. 8th: I listened to a 20VC podcast interview with David Cahn, a partner at Sequoia. He has invested in AI companies including Hugging Face, Runway, Supabase, Weights & Biases and Replit. - 20VC

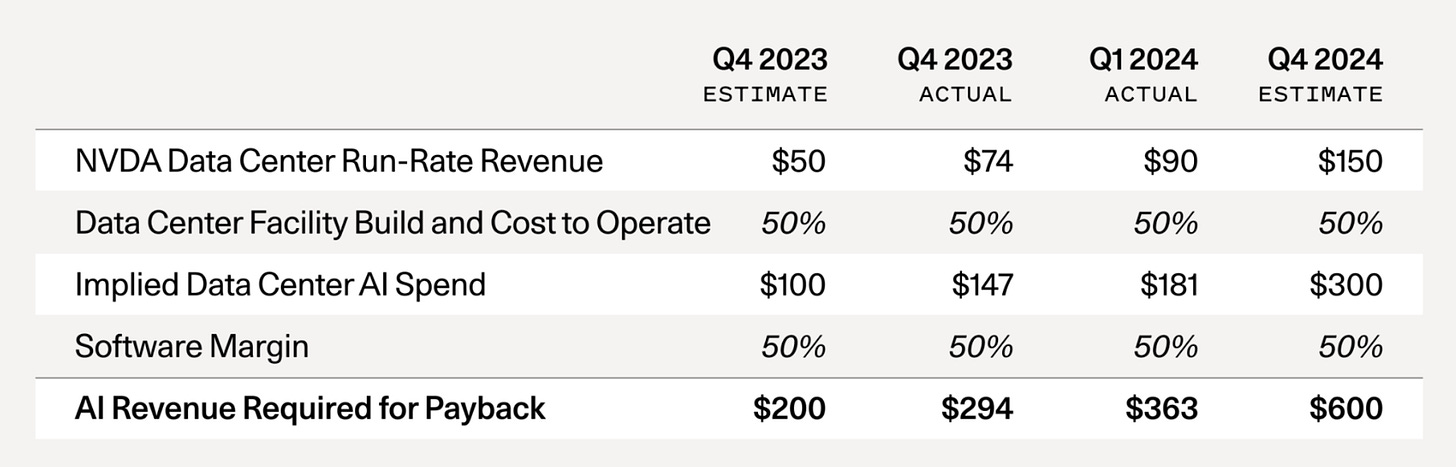

“Believing in AI and believing in infinite CapEx in the next two years are very different concepts. You can believe in AI but also believe that the amount of CapEx in the next 24 months may be difficult to pay back.”

Tech giants argue that CapEx is insignificant because their balance sheets can sustain such spending. They invest in AI defensively to maintain a competitive edge in a new technological wave. “We had all these promoters coming into AI trying to say that the CapEx doesn’t matter, the budgets don’t matter. What you heard this week from Sundar Pichai (CEO at Google) and Mark Zuckerberg (CEO & cofounder at Meta) is that they understand this is risky. It’s a risk worth taking, and they have to take it.” “They’re making a strategic calculation: if we don’t make this investment, our competitors are going to get ahead.”

Tech giants form a powerful oligopoly and will go to great lengths to protect their dominance. “[Tech giants (Meta, Microsoft, Amazon, Google) are representing] one of the most powerful oligopolies in the history of business that we’re dealing with. They’re going to be willing to spend aggressively to protect their oligopoly.”

Tech giants investing $600bn in AI CapEx is fantastic for startups. “These big tech companies are producers of compute; startups are consumers of compute. If you believe there is an overproduction of compute and compute prices go down, startups win because they are buying those compute resources at lower costs. Lower cost of compute directly translates to higher gross margin for startups, higher gross margin translates directly to more valuable companies.”
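Cahn's point that cheaper compute flows straight into gross margin can be made concrete with a toy calculation. The revenue and cost figures below are hypothetical and not from the podcast; the sketch assumes compute is booked in cost of goods sold, usage stays constant, and the startup keeps the savings rather than passing them on to customers.

```python
def gross_margin(revenue: float, compute_cost: float, other_cogs: float) -> float:
    """Gross margin when compute is part of cost of goods sold."""
    return (revenue - compute_cost - other_cogs) / revenue


# Hypothetical AI startup (illustrative numbers only, not from the podcast)
REVENUE = 100.0
COMPUTE_COST = 40.0  # compute spend at today's prices
OTHER_COGS = 10.0    # hosting, support, etc.

# Assumption: same usage and same customer pricing as compute prices fall
for price_drop in (0.0, 0.25, 0.50):
    gm = gross_margin(REVENUE, COMPUTE_COST * (1 - price_drop), OTHER_COGS)
    print(f"compute price -{price_drop:.0%}: gross margin {gm:.0%}")
```

With compute at 40% of revenue, each 25% drop in compute prices adds 10 points of gross margin in this example (50%, 60%, 70%).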

Tech giants are protecting their cloud golden goose by investing massive CapEx in AI. “Tech giants are too powerful; they’re erecting barriers to entry right now. In order to be an AI cloud, you need to be willing to light a bunch of money on fire. That is a pretty significant barrier to entry for new entrants. What you heard from the commentary this week is that’s not an accident. That’s pretty explicitly what they’re trying to do: protect their golden goose. The cloud business today is a $250 billion business, the same size as the SaaS sector. So of course they’re going to do everything they can to protect it.”

“If models get better and scaling laws hold, we probably need to change the architecture of the data center and new chips.”

“Amazon announced $50bn of new data centers in the last six months. These data centers are getting built, and they have to hire people to build them.”

“The question comes down to whether researchers will come up with research breakthroughs faster than we can build bigger data centers. There are a lot of people in Silicon Valley who say all that matters now is scale. Others believe we’re going to make a breakthrough in reasoning, data usage, and model efficiency. The evidence today favors scaling laws, but we hope for breakthroughs in other areas to drive progress.”

You need to make your product more useful with AI to be able to charge for additional AI features. “If you are simply injecting AI to tell investors and for marketing purposes without actual benefit to the consumer, then you’re just adding cost with no benefit, and consumers won’t pay more.”

“In businesses with barriers to entry, you have pricing power. In businesses with low barriers to entry, you don’t. It will be sector by sector. Some sectors will get commoditized and bid down to zero percent gross margin. In industries with structural low gross margins, it’s hard to raise the gross margin. In industries with higher barriers to entry, like those with data moats, those companies have pricing power. If you had pricing power before AI, you’ll have it after AI. If you didn’t have it before, you probably won’t after.”

“Vertical integration between the model layer and the data center matters. Meta, for example, controls its own data centers and is building the models. The vertical integration piece is going to matter as models get bigger and bigger. Separate teams running the data center and building the model won’t work.”

“The evidence of the last couple of years is that in order to play in the big model game, you need a cash machine that cannot be the AI business. Facebook has Instagram, Amazon has AWS, Microsoft has Azure. You need a cash machine to compete.”

“The cloud guys are playing defense; Meta is playing offense. Meta can afford to be creative and play for the future, whereas the cloud companies are stuck in a prisoners’ dilemma and have to keep investing.”

“The consumer killer use case [for Meta] hasn’t emerged yet, but they have fantastic distribution. If they can unlock that killer use case, they’ll figure out how to monetize it.”

“Alex Wang from Scale said there are three things that matter on model and AI progression: compute, algorithms, and data. Do you agree, and which do you think is the core constraint?” “I think compute, models, and data have converged. All the big guys know what they’re doing and are doing the same thing. Everyone’s using Scale, and it’s hard to argue that any of the big model companies has a data advantage. Compute is a commodity you pay for, and models have some secret sauce, but scaling laws are what matter.” “Servers, steel, and power are the three things that matter. Servers include chip innovation, steel includes construction, and power includes the energy revolution. The industrial nature of AI is driving these changes.”

“The lesson from those companies is to listen to what people do, not what they say. When I called customers on Marqeta, they said it’s too expensive and they’ll build it themselves, but they never churned. The same with UiPath and Snowflake. Customers might say they’re going to churn, but if they’re paying for it, it’s for a reason.”

“The bar for success is so high that unless the company [you picked as one of the 1-2 investments you do per year] is one of the 50 most important companies in the world, you haven’t done your job well.”

Friday, Aug. 9th: I listened to a No Priors podcast episode with Elad Gil and Sarah Guo. - No Priors

“Daron Acemoglu (MIT professor) argues that AI will impact less than 5% of all tasks and that the trillion dollars spent on training models is a waste because AI won’t solve complex problems.”

“Jim Covello (global equity research at GS) contrasts AI with the internet, stating that while the internet disrupted expensive things from the beginning, AI is expensive from the start, and any efficiency gains will be competed away, meaning companies won’t benefit in the long run.”

“There’s a broader misconception about how this technology works and its implications.” “Many people are stuck in the old AI world, treating it like traditional machine learning without realizing the breakthroughs in Transformer-based models and other architectures that provide different functionalities and features.”

Enterprise adoption is still in its early days. Elad breaks down AI adoption into three segments: (i) AI vendor tools that companies can purchase, (ii) internal AI tools and (iii) customer-centric AI tools. Most of what has happened so far falls into the first segment, so the big wave of enterprise adoption is still ahead of us.

“AI is opening up markets in unexpected ways through new capabilities, easy access via APIs (no need to do ML ops and ML trainings), and mandates for AI adoption (many companies have a mandate to do AI). These drivers make markets more accessible than they’ve been in decades.”

On AI-driven buyouts:

“There are situations where you actually want to do an AI driven buyout because it's better to have somebody take over the asset and dramatically change the cost structure or the leverage per employee using AI.”

“Today, there are really odd things you can suddenly do just because the buying behavior has shifted. Everybody has an AI mandate and they're willing to consider products that they wouldn't be able to consider without AI.”

“With AI driven buyouts, you are shortcutting the change management process for industries that can be like dramatically automated with these new capabilities by just controlling them.”

“You are shortcutting adoption of technology, which in some industries may just not happen very fast. You are shortcutting change management. But you are also taking over an asset and you are able to entirely change how a subset of the organisation functions relative to AI (cf. do something similar to what Klarna did in CS). You can cut headcount while drastically improving the quality of the function. There are a lot of industries where a lot of the costs are associated with, or levered on, time.”

“You need both PE expertise and tech-savvy to make AI-driven buyouts work. The early tech-enabled buyouts often failed because they added only a thin software veneer. Successful examples now involve deep integration of AI, significantly altering operations and leveraging AI more than just financial engineering.”

“The traditional tech-enabled buyout playbook, which largely didn't work, is based on a PE person coming in and buying a bunch of companies to roll them up and add a thin layer of software on top to claim that you now have a tech company.” It’s just an arbitrage play on the difference between a single asset and a platform with multiple assets, as well as the difference between a PE business and a tech business.

On incubation:

“Incubation makes sense when you have visibility into an opportunity set or a customer set that others do not. Moreover, the expertise set required to make a company work is extremely hard to get organically.”

“There's a handful of firms that have actually done very good incubations because they are vertically specialised. They understand the dynamics of their industry. They have proprietary access to customers and product ideas that will take off in their industry.”

“With AI the odd thing that's happening is that certain subsets of the market are incredibly crowded, with a dozen companies doing the same thing. At the same time, you have these areas where it's really obvious to build stuff and they're just wide open and nobody's doing anything.”

In the public markets, Elad Gil would focus on investing in 3 categories of companies: (i) those poised to become the next Magnificent 7, compounding high growth over a decade or more, (ii) established big tech firms that will effectively capitalize on the AI wave (e.g. Apple triggering a replacement cycle across all phones with an AI-powered phone) and (iii) companies where adding AI offers limited product improvement, making them defensible.

Saturday, Aug. 10th: Ben Evans wrote about the current hype surrounding Gen. AI. He draws parallels to past technology cycles, suggesting that LLMs, much like the Dotcom bubble or early cloud computing, may be experiencing an inflated hype phase. While these models appear to be finished products ready for wide deployment, they lack the refinement necessary for broader, practical applications. - Ben Evans

“The future can take a while - it took more than 20 years for 20% of US retail to move online. People forget this now, but the iPhone took time as well. The same, of course, applies to the enterprise. If you work in tech, cloud is old and boring and done, but it’s still only a third or so of enterprise workflows 25 years after Marc Benioff tried to persuade people to do software in the browser.”

“It does take time to change your habits and ways of thinking around an entirely new kind of tool. I’ve also argued that an LLM by itself is not a product - it’s a technology that can enable a tool or a feature, and it needs to be unbundled or rebundled into new framings, UX and tools to become useful. That takes even more time.”

“Last summer Accenture proudly announced that it had already done $300m of ‘generative AI’ work for clients… and that it had done 300 projects. Even an LLM can divide 300 by 300 - that’s a lot of pilots, not deployment.”

“Morgan Stanley’s latest CIO survey says that 30% of big company CIOs don’t expect to deploy anything before 2026.”

Monday, Aug. 12th: I listened to a Colossus podcast episode with Martin Casado, GP at a16z. - Colossus

Gen. AI will have a greater impact by driving an explosion of new consumer experiences rather than by solving enterprise automation problems.

“When it comes to content creation like any sort of digital creation, the marginal cost has gone to zero.”

“Just like in the early days of the Internet, I don't think we know what that's going to look like.”

“One way to think about this current AI wave is the marginal cost of content creation is going to 0 and to some extent, natural language reasoning is going to 0.”

“I believe that at least 1/3 of the money made by LLMs is from people that are just playing with an LLM-based app focused on creating an emotional connection (e.g. virtual friend, new style of video game, virtual girlfriend).”

“A lot of those language reasoning tasks (e.g. analysing a contract with an LLM instead of a law firm) are going to go to these models because they do a pretty good job of the language reasoning piece.”

“If you actually look, the progress for AI has always been incredibly steady. It has always been up and to the right. We've solved many problems we didn't think you could solve.”

“People use it and then they get bored and it drops off. They have to launch another model that goes up and it drops off. They have to launch another model. And so then you're in this long march, let's call it the waves, where you're riding these waves of new models and later on it sucks.”

“At that point, that's when the companies have to find a traditional moat. There's a lot of moats, but that's when you have to create the moat and get off the treadmill. I think if you don't get off the treadmill, this all converges basically to the same model.”

“I think it's amazing that Facebook releases Llama, but when you release open source without actually having an enterprise sales force and go-to-market engine around it, I think it kind of leaves people to do whatever they want, but you don't ever push a product and pull it in the way that an enterprise buyer would want.”

“Market annealing is for category creation. If you're in a mature market, just go build something better; people already know how to use it. But in new markets, the customer doesn't know the value that you have, they haven't a clue. They don't know how to use it. They don't have the workflow. So there's a ton of work where you're actually hammering the market and making it so they can accept it.”

“Gen. AI is a new breakthrough, in my opinion. It's got its own set of properties. And those properties are amazing for certain problems and not amazing for other problems. I personally am very excited about and attracted to the problems where it's a great fit. I think it's a great fit for emotional companionship. I think it's a great fit for the creativity problem. I think it's a great fit for the language reasoning problem.”

Tuesday, Aug. 13th: Microsoft faced a significant challenge when OpenAI’s CEO Sam Altman was unexpectedly fired, leading Satya Nadella to swiftly intervene to stabilize the situation. In response, Microsoft has diversified its AI investments, reducing its reliance on OpenAI by partnering with other AI startups (incl. Mistral, Inflection and G42) and developing its own models. - FT

“For Microsoft and its investors, the incident was a reminder of how central OpenAI had become to its strategy: the growth of artificial intelligence.”

“In the eight months since the board dispute, the tech giant has worked to execute an AI strategy independent of Altman’s start-up. It has diversified its investments and partnerships in generative AI, built its own smaller, cheaper models, and hired aggressively to develop its consumer AI efforts.”

“The raft of departures means that nine of the start-up’s 11 co-founders are currently not working there.”

“More controversially, the so-called “acqui-hire” of Inflection founder Suleyman and most of the start-up’s staff in March set Microsoft on a path to confrontation with its biggest AI partner. The combative former Google DeepMind executive, who left that company having developed a reputation as a bully, was put in charge of a new internal AI unit at Microsoft and tasked with building consumer-facing products that would compete with those from Altman’s OpenAI.”

“The US Federal Trade Commission is probing whether the Inflection deal was structured to circumvent antitrust laws, essentially gutting the smaller company of talent and software, while avoiding the formal scrutiny a full takeover would have brought.”

“Despite the scrutiny, the Inflection deal has become a model for other tech giants seeking talent. In June, Amazon hired most of the staff at AI-agent start-up Adept and paid $330mn to license its intellectual property. Last week, Google rehired the founder of chatbot maker Character.ai and paid more than $2bn to license its technology and cash out existing investors.”

“Azure is OpenAI’s exclusive cloud provider and will be paid for whatever computing power it uses. Microsoft is also agnostic about which AI models are used, so long as they are accessed through its cloud.”

“We have over 1,600 models available through Azure AI . . . the main thing we want is people to be building and using them on Azure.”

“Azure AI now had 60,000 customers, up more than 60 per cent from a year ago.”

Wednesday, Aug. 14th: I read several of Sequoia’s blog posts on AI. - David Cahn 1, David Cahn 2, David Cahn 3, Sonya Huang

“How much of this CapEx build out is linked to true end-customer demand, and how much of it is being built in anticipation of future end-customer demand?”

“During historical technology cycles, overbuilding of infrastructure has often incinerated capital, while at the same time unleashing future innovation by bringing down the marginal cost of new product development.”

“I noticed a big gap between the revenue expectations implied by the AI infrastructure build-out, and actual revenue growth in the AI ecosystem, which is also a proxy for end-user value.”

“The supply shortage has subsided: Late 2023 was the peak of the GPU supply shortage. Startups were calling VCs, calling anyone that would talk to them, asking for help getting access to GPUs. Today, that concern has been almost entirely eliminated. For most people I speak with, it’s relatively easy to get GPUs now with reasonable lead times.”

“Nvidia reported in Q4 that about half of its data center revenue came from the large cloud providers. Microsoft alone likely represented approximately 22% of Nvidia’s Q4 revenue. Hyperscale CapEx is reaching historic levels. These investments were a major theme of Big Tech Q1 ‘24 earnings, with CEOs effectively telling the market: “We’re going to invest in GPUs whether you like it or not.” Stockpiling hardware is not a new phenomenon, and the catalyst for a reset will be once the stockpiles are large enough that demand decreases.”

“The Information recently reported that OpenAI’s revenue is now $3.4B, up from $1.6B in late 2023.” “Outside of ChatGPT, how many AI products are consumers really using today? Consider how much value you get from Netflix for $15.49/month or Spotify for $11.99. Long term, AI companies will need to deliver significant value for consumers to continue opening their wallets.”

“Earlier this year, Nvidia announced their B100 chip, which will have 2.5x better performance for only 25% more cost. I expect this will lead to a final surge in demand for NVDA chips. The B100 represents a dramatic cost vs. performance improvement over the H100, and there will likely be yet another supply shortage as everyone tries to get their hands on B100s later this year.”
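Taking the quoted figures at face value (2.5x the performance for 1.25x the cost), the price-performance gain is a simple ratio. The Python sketch below is only that arithmetic; the function name is illustrative, and real price-performance depends on workload, pricing and availability, none of which the quote specifies.

```python
def perf_per_dollar_gain(perf_multiplier: float, cost_multiplier: float) -> float:
    """How much more work per dollar a new chip delivers versus the old one,
    given its relative performance and its relative cost."""
    return perf_multiplier / cost_multiplier


# Figures from the quote: 2.5x performance for 25% more cost (i.e. 1.25x the price).
gain = perf_per_dollar_gain(2.5, 1.25)
cost_per_unit_of_work = 1 / gain  # relative to the previous generation

print(f"Performance per dollar: {gain:.1f}x")
print(f"Cost per unit of work: {cost_per_unit_of_work:.0%} of the previous generation")
```

On these numbers, each unit of compute on a B100 would cost about half as much as on an H100.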

“GPU computing is increasingly turning into a commodity, metered per hour. Unlike the CPU cloud, which became an oligopoly, new entrants building dedicated AI clouds continue to flood the market. Without a monopoly or oligopoly, high fixed cost + low marginal cost businesses almost always see prices competed down to marginal cost (e.g., airlines).”

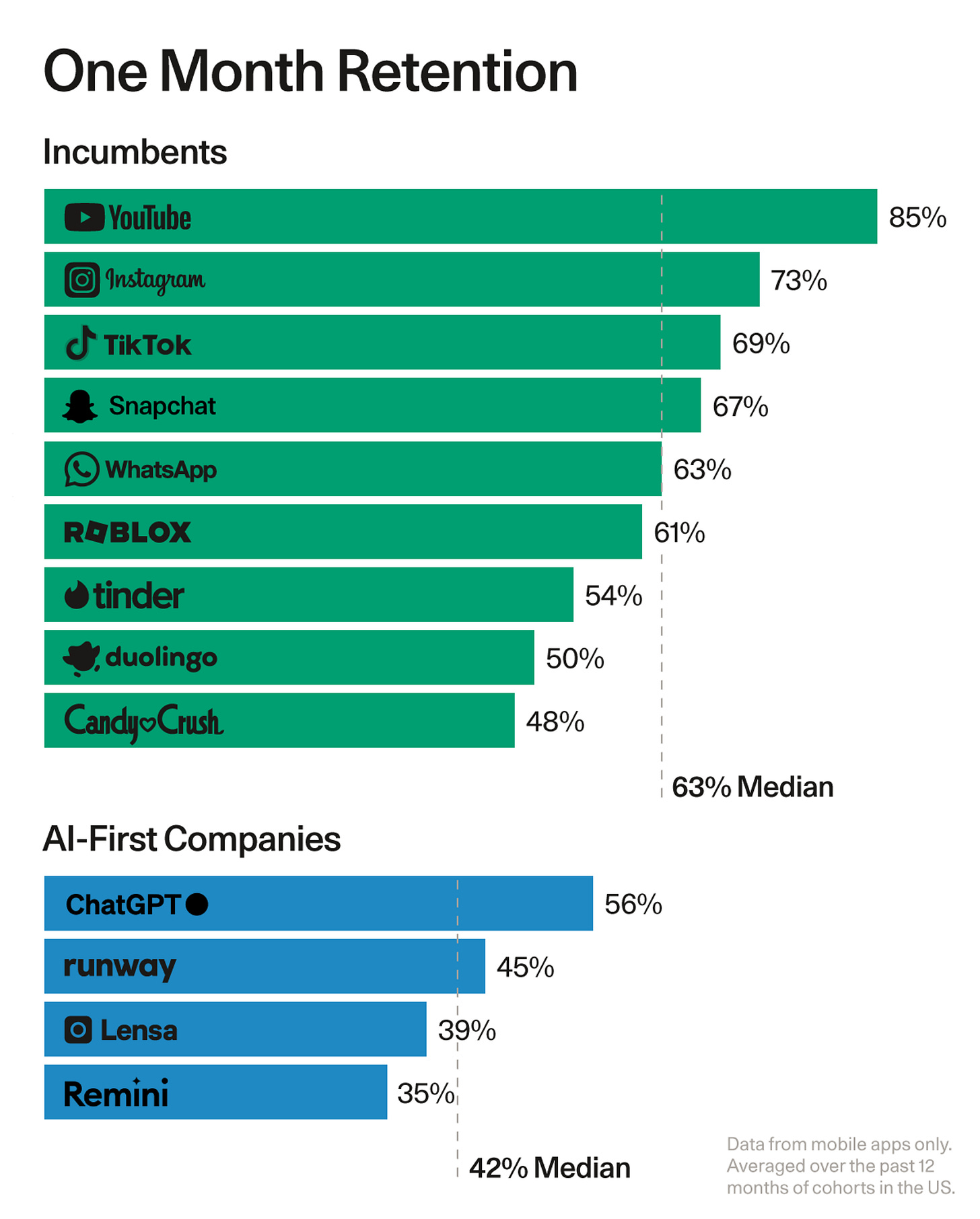

“Perhaps most worryingly, a whisper began to spread within Silicon Valley that generative AI was not actually useful. The products were falling far short of expectations, as evidenced by terrible user retention.”

“These applications are different in nature than the first apps out of the gate. They tend to use foundation models as a piece of a more comprehensive solution rather than the entire solution. They introduce new editing interfaces, making the workflows stickier and the outputs better. They are often multi-modal.”

“We still believe that there will be a separation between the “application layer” companies and foundation model providers, with model companies specializing in scale and research and application layer companies specializing in product and UI. In reality, that separation hasn’t cleanly happened yet. In fact, the most successful user-facing applications out of the gate have been vertically integrated.”

“The first killer apps emerged. It’s been well documented that ChatGPT was the fastest application to reach 100M MAU—and it did so organically in just 6 weeks. By contrast, Instagram took 2.5 years, WhatsApp took 3.5 years, and YouTube and Facebook took 4 years to reach that level of user demand. But ChatGPT is not an isolated phenomenon. The depth of engagement of Character AI (2 hour average session time), the productivity benefits of GitHub Copilot (55% more efficient), and the monetization path of Midjourney (hundreds of millions of dollars in revenue) all suggest that the first cohort of killer apps has arrived.”

“Generative AI’s biggest problem is not finding use cases or demand or distribution, it is proving value.”

“Amara’s Law—the phenomenon that we tend to overestimate the effect of a technology in the short run and underestimate the effect in the long run—is running its course.”

“Model size is the number one driver of performance. As a result, the next generation of models are aiming for a 10x increase in model scale to 300k GPUs. To house one of these models, you need to build an entire new data center. This changes AI in two fundamental ways: First, it changes the lead time between models. If before you could train your model in 6 to 12 months, now you need to add 18 to 24 months of construction time before you can actually start training. Second, it changes the source of maximum competitive advantage. In the new era, construction efficiency may matter more than research breakthroughs.”

“Today, five companies have arrived at the starting line in this new race toward data center scale-up: Microsoft/OpenAI, Amazon/Anthropic, Google, Meta and xAI.”

Thursday, Aug. 15th: I listened to a Lex Fridman podcast episode with Yann LeCun. - Lex Fridman

Autoregressive LLMs are not the way we’re going to make progress towards superhuman intelligence for 2 main reasons:

“(i) The capacity to understand the physical world, (ii) the ability to remember and retrieve things, persistent memory, (iii) the ability to reason, and (iv) the ability to plan. Those are four essential characteristics of intelligent systems or entities, humans, animals. LLMs can do none of those or they can only do them in a very primitive way and they don’t really understand the physical world.”

“It seems like an enormous amount of knowledge that LLMs can accumulate, but then you realize it’s really not that much data. […] Through sensory input, we see a lot more information than we do through language, and that despite our intuition, most of what we learn and most of our knowledge is through our observation and interaction with the real world, not through language.”

Moravec’s paradox. “We have LLMs that can pass the bar exam, but then they can’t learn to drive in 20 hours like any 17-year-old, they can’t learn to clear out the dinner table and fill up the dishwasher like any 10-year-old can learn in one shot.”

“The way LLMs are trained is that you take a piece of text, you remove some of the words in that text, you mask them, you replace them by blank markers, and you train a gigantic neural net to predict the words that are missing. If you build this neural net in a particular way so that it can only look at words that are to the left of the one it’s trying to predict, then what you have is a system that basically is trying to predict the next word in a text.”
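To make “predict a word only from the words to its left” concrete, here is a minimal Python sketch. It splits text on whitespace (real models use subword tokenizers and process all positions in parallel) and builds the (context, target) pairs a model would be trained on, plus the lower-triangular “causal” mask that stops each position from seeing later words. The function names are illustrative, not from any particular library.

```python
from typing import List, Tuple


def next_token_pairs(tokens: List[str]) -> List[Tuple[List[str], str]]:
    """Turn one training sequence into (context, target) pairs: each word is
    predicted only from the words to its left (the autoregressive constraint)."""
    return [(tokens[:i], tokens[i]) for i in range(1, len(tokens))]


def causal_mask(n: int) -> List[List[bool]]:
    """mask[i][j] is True when position i is allowed to look at position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


tokens = "the cat sat on the mat".split()
for context, target in next_token_pairs(tokens):
    print(" ".join(context), "->", target)

for row in causal_mask(4):
    print("".join("x" if allowed else "." for allowed in row))
```

Each line of the mask shows which earlier words one position may use; everything to the right stays hidden, which is what turns the network into a next-word predictor.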

“An LLM doesn’t think about its answer really. It retrieves it because it’s accumulated a lot of knowledge. So it can retrieve some things, but it’s going to just spit out one token after the other without planning the answer.”

“The world is incredibly more complicated and richer in terms of information than text. Text is discrete, video is high-dimensional and continuous.”

“All the systems that attempt to predict missing parts of an image or video from a corrupted version of it, basically, so take an image or a video, corrupt it or transform it in some way, and then try to reconstruct the complete video or image from the corrupted version, and then hope that internally, the system will develop good representations of images that you can use for object recognition, segmentation, whatever it is. That has been essentially a complete failure, whereas it works really well for text.”

About JEPA (Joint-Embedding Predictive Architecture).

“Instead of training a system to encode the image and then training it to reconstruct the full image from a corrupted version, you take the full image, you take the corrupted or transformed version, you run them both through encoders. Then you train a predictor on top of those encoders to predict the representation of the full input from the representation of the corrupted one.”

“The big question is how do you train something like this? And until five years ago or six years ago, we didn’t have particularly good answers for how you train those things.”

“What has changed in the last three, four years is now we have methods that are non-contrastive. So they don’t require those negative contrastive samples of images that we know are different. You train them only with images that are different versions or different views of the same thing, and you rely on some other tricks to prevent the system from collapsing. And we have half a dozen different methods for this now.”

What’s the difference between JEPA and generative architectures like LLMs?

“LLMs or vision systems that are trained by reconstruction generate the inputs. They generate the original input that is non-corrupted, non-transformed, so you have to predict all the pixels, and there is a huge amount of resources spent in the system to actually predict all those pixels, all the details.”

“In a JEPA, you’re not trying to predict all the pixels, you’re only trying to predict an abstract representation of the inputs. And that’s much easier in many ways.”
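A toy sketch of where each objective computes its loss, in plain Python. Everything here is an assumption for illustration: random linear maps stand in for the encoders, predictor and decoder, “corruption” simply zeroes every fourth value, and nothing is trained. The JEPA-style loss compares two 4-dimensional representations, while the reconstruction loss has to predict all 16 input values. The last lines show why the collapse-prevention tricks mentioned earlier are needed: encoders that ignore their input drive the JEPA-style loss to exactly zero.

```python
import random


def linear(weights, x):
    """Apply a weight matrix (a list of rows) to a vector."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in weights]


def mse(a, b):
    """Mean squared error between two equal-length vectors."""
    return sum((ai - bi) ** 2 for ai, bi in zip(a, b)) / len(a)


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.1) for _ in range(cols)] for _ in range(rows)]


rng = random.Random(0)
INPUT_DIM, EMBED_DIM = 16, 4  # hypothetical sizes: representation is smaller than input

x_full = [rng.random() for _ in range(INPUT_DIM)]
x_corrupted = [0.0 if i % 4 == 0 else v for i, v in enumerate(x_full)]  # mask every 4th value

enc_full = random_matrix(EMBED_DIM, INPUT_DIM, rng)     # encodes the full input
enc_corrupt = random_matrix(EMBED_DIM, INPUT_DIM, rng)  # encodes the corrupted input
predictor = random_matrix(EMBED_DIM, EMBED_DIM, rng)    # representation -> representation
decoder = random_matrix(INPUT_DIM, EMBED_DIM, rng)      # representation -> input values

z_corrupt = linear(enc_corrupt, x_corrupted)

# JEPA-style: predict the *representation* of the full input.
jepa_loss = mse(linear(predictor, z_corrupt), linear(enc_full, x_full))

# Generative / reconstruction: predict every input value.
recon_loss = mse(linear(decoder, z_corrupt), x_full)

print(f"JEPA-style loss over {EMBED_DIM} numbers: {jepa_loss:.4f}")
print(f"Reconstruction loss over {INPUT_DIM} numbers: {recon_loss:.4f}")

# Collapse: encoders that output a constant make the JEPA-style loss trivially zero.
zero_enc = [[0.0] * INPUT_DIM for _ in range(EMBED_DIM)]
collapsed = mse(linear(predictor, linear(zero_enc, x_corrupted)), linear(zero_enc, x_full))
print(f"JEPA-style loss with collapsed encoders: {collapsed}")
```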

“What the JEPA system, when it’s being trained, is trying to do is extract as much information as possible from the input, but yet only extract information that is relatively easily predictable.”

“It allows the system to essentially learn an abstract representation of the world where what can be modeled and predicted is preserved and the rest is viewed as noise and eliminated by the encoder.”

“It lifts the level of abstraction of the representation. If you think about this, this is something we do absolutely all the time.”

“We were lazy with language because we already got the abstract representation for free.”

LLMs.

LLMs exploit self-supervised learning, and they are a good demonstration that it actually works.

“It allowed us to create systems that understand language, systems that can translate hundreds of languages in any direction, produce summaries, answer questions and produce text.”

“The autoregressive trick where you constrain the system to not elaborate a representation of the text from looking at the entire text, but only to predict a word from the words that come before.”

“We just assume that if a system is fluent in manipulating language, then it has all the characteristics of human intelligence, but that impression is false. We’re really fooled by it.”

“LLMs are impressive. They can do a lot of useful things. There’s a whole industry that is being built around them. They’re going to make progress, but there are a lot of things they cannot do, and we have to realize what they cannot do and then figure out how we get there.”

“There’s ample evidence that we’re not going to be able to learn good representations of the real world using generative models. So I’m telling people, everybody’s talking about generative AI: ‘if you’re really interested in human level AI, abandon the idea of generative AI.’”

AI hallucinations

“Why do hallucinations happen with LLMs, and to what degree is that a fundamental flaw of LLMs?” “Because of the autoregressive prediction, every time an LLM produces a token or a word, there is some level of probability for that word to take you out of the set of reasonable answers.” “If you assume that those errors are independent across a sequence of tokens being produced, what that means is that every time you produce a token, the probability that you stay within the set of correct answers decreases, and it decreases exponentially.”
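The compounding argument can be written down directly. Under the simplifying assumption in the quote, that each token independently has probability e of leaving the set of acceptable answers, an n-token answer stays acceptable with probability (1 - e)^n. The Python sketch below evaluates that formula; the 1% per-token error rate is a hypothetical number chosen for illustration, not a measured one.

```python
def prob_stays_correct(per_token_error: float, num_tokens: int) -> float:
    """P(an answer of num_tokens tokens never leaves the set of acceptable answers),
    assuming each token independently does so with probability per_token_error."""
    return (1.0 - per_token_error) ** num_tokens


for n in (10, 100, 1000):
    print(f"{n:>5} tokens: {prob_stays_correct(0.01, n):.4f}")
```

With a 1% per-token error rate, a 100-token answer stays on track only about 37% of the time, and a 1,000-token answer almost never does. The conclusion rests entirely on the independence assumption.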

“The way you can correct for this is that you fine tune the system by having it produce answers for all kinds of questions that people might come up with.” “People are people, so a lot of the questions that they have are very similar to each other, so you can probably cover 80% or whatever of questions that people will ask by collecting data and then you fine tune the system to produce good answers for all of those things.”

“There is the enormous set of prompts that you have not covered during training.”

“There’s a very, very long tail of things that people will ask and you can fine tune the system for the 80% or whatever of the things that most people will ask. And then this long tail is so large that you’re not going to be able to fine tune the system for all the conditions.” “In the end, the system ends up being a giant lookup table essentially, which is not really what you want, you want systems that can reason, certainly that can plan.”

Reasoning in AI

“The type of reasoning that takes place in LLMs is very, very primitive, and the reason you can tell it is primitive is because the amount of computation that is spent per token produced is constant.”
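A back-of-the-envelope way to see the “constant computation per token” point uses the common rule of thumb that a dense transformer with N parameters spends roughly 2N floating-point operations per generated token in a forward pass. This approximation ignores the attention term, which grows with context length. The Python sketch below applies it; the 7B-parameter model and the 20-token answers are hypothetical.

```python
def generation_flops(num_params: float, tokens_generated: int) -> float:
    """Approximate forward-pass compute to generate tokens_generated tokens with a
    dense transformer of num_params parameters (~2 FLOPs per parameter per token).
    The attention cost, which grows with context length, is ignored."""
    return 2 * num_params * tokens_generated


N_PARAMS = 7e9  # hypothetical 7B-parameter model

easy = generation_flops(N_PARAMS, 20)  # e.g. "What is the capital of France?" in ~20 tokens
hard = generation_flops(N_PARAMS, 20)  # a hard planning question, also answered in ~20 tokens

print(f"easy: {easy:.1e} FLOPs, hard: {hard:.1e} FLOPs")
print("Same budget:", easy == hard)
```

Difficulty never enters the formula; only the number of tokens does.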

“This is not the way we work, the way we reason is that when we’re faced with a complex problem or a complex question, we spend more time trying to solve it and answer it because it’s more difficult.”

“It’s the same difference as the difference between what psychologists call system one and system two in humans. System one is all the things that you do instinctively without really having to deliberately plan and think about them. Then there are all the tasks where you need to plan. This type of deliberate planning, which uses your internal world model, is system two, and this is what LLMs currently cannot do.”

“It’s not going to be autoregressive prediction of tokens, it’s going to be more something akin to inference of latent variables in what used to be called probabilistic models or graphical models.”

“An energy-based model can be used to measure to what extent an answer is a good answer for a prompt.” “It’s much better to do an optimization in continuous space where you can do gradient descent, as opposed to generating tons of things and then selecting the best: you just iteratively refine your answer to go towards the best, and that’s much more efficient.”
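A toy contrast between the two strategies in the quote, in Python. The energy function is a hypothetical one-dimensional quadratic whose minimum (the “best answer”) sits at 3.0; in LeCun’s proposal the energy would be learned and the optimization would run over abstract representations, not a single number. Gradient descent follows the slope to refine one answer, while best-of-n samples random candidates and keeps the lowest-energy one. Both get a budget of 50 evaluations.

```python
import random

TARGET = 3.0  # hypothetical location of the best answer


def energy(y: float) -> float:
    """Toy energy: low when the answer y is good for the prompt (close to TARGET)."""
    return (y - TARGET) ** 2


def energy_grad(y: float) -> float:
    """Derivative of the toy energy with respect to y."""
    return 2 * (y - TARGET)


def refine_by_gradient_descent(y0: float, lr: float = 0.1, steps: int = 50) -> float:
    """Iteratively move one answer downhill on the energy."""
    y = y0
    for _ in range(steps):
        y -= lr * energy_grad(y)
    return y


def best_of_n(n: int, rng: random.Random) -> float:
    """Generate n random candidate answers and keep the lowest-energy one."""
    candidates = [rng.uniform(-10.0, 10.0) for _ in range(n)]
    return min(candidates, key=energy)


rng = random.Random(42)
y_gd = refine_by_gradient_descent(0.0)
y_bon = best_of_n(50, rng)
print(f"gradient descent:   y={y_gd:.5f}, energy={energy(y_gd):.2e}")
print(f"best of 50 samples: y={y_bon:.5f}, energy={energy(y_bon):.2e}")
```

On a smooth energy like this one, the refined answer lands within about 1e-4 of the optimum, while the best of 50 random guesses is typically a tenth or two away. Real energy landscapes are rarely this well behaved.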

Reinforcement Learning from Human Feedback

“I think its use should be minimized because it’s incredibly inefficient in terms of samples.”

“The way to adjust your world model, even in advance, is to explore parts of the space where you know that your world model is inaccurate; that’s called curiosity, basically, or play.”

Friday, Aug. 16th: I listened to Bill Gurley and Brad Gerstner on AI and on the public market volatility. - BG2

“If we put this in context, since Q1 of 2023, stocks are up 60% after everyone was nervous about a hard landing. That’s a huge move in just six quarters, and some stocks like Nvidia have more than doubled. So, what’s happened this week and last month is more of a run-of-the-mill, healthy consolidation of these big gains.”

“As prices go up, risk-reward gets worse unless numbers keep going up, and that hasn’t been the case in 2024. We’ve seen five key forces: taxes, earnings misses or just skinny beats, the potential AI air pocket, and recently, two more emerged—geopolitical factors like the Japanese carry trade unwind and expanding tensions in the Middle East. These create a toxic brew of uncertainty, which raises the discount rate and lowers multiples on stocks.”

“When you have negative rates, it leads to a carry trade: borrowing in yen, investing in higher-yielding assets around the world and pocketing the spread. But when the Bank of Japan raised rates, people had to unwind these trades, which meant selling U.S. bonds and stocks around the world, adding to the market’s volatility.”
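The carry-trade mechanics come down to a few lines of arithmetic. The Python sketch below computes the one-period return per yen borrowed; all rates and the exchange-rate move are hypothetical round numbers, not actual Bank of Japan or market figures, and fees, leverage and hedging are ignored.

```python
def carry_trade_return(borrow_rate: float, invest_rate: float, fx_change: float) -> float:
    """One-period profit per yen borrowed: convert the yen into a foreign currency,
    earn invest_rate there, convert back after the foreign currency has moved by
    fx_change against the yen (negative = yen strengthened), then repay the loan."""
    proceeds_in_yen = (1 + invest_rate) * (1 + fx_change)
    return proceeds_in_yen - (1 + borrow_rate)


# Hypothetical scenarios.
print(f"Stable FX, yen at 0%:           {carry_trade_return(0.00, 0.05, 0.00):+.2%}")
print(f"Rate hike to 0.25%:             {carry_trade_return(0.0025, 0.05, 0.00):+.2%}")
print(f"Foreign currency -10% vs yen:   {carry_trade_return(0.0025, 0.05, -0.10):+.2%}")
```

In this toy example, a small rate hike barely dents the spread; a move in the currency is what turns the trade into a loss, and selling the foreign assets to repay yen loans is the unwind the quote describes.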

“The first thing people do when things get tight is defer buying a couch—that’s what Wayfair is seeing. The second thing is they defer their family trip. When Airbnb, Disney, the cruise lines, and airlines start seeing downturns, you know the consumer is really getting pressured.”

“If you look at the S&P, 80% of companies have now reported, and 48% of them had a positive revenue beat. That sounds like a lot, but it’s the lowest level since Q3 2019.”

“We’re facing a different tax situation in this election compared to prior ones. The corporate tax cuts were made permanent, but individual tax cuts are set to expire in 2025. If a new administration comes in and those cuts expire, it could be a big risk to the market. The personal income tax reductions from the Trump tax cuts, if reversed, would pull $150bn out of the economy, which could be a significant headwind for the markets.”

“If the risks we’re discussing materialize—recession, geopolitical events, etc.—the market could correct by another 10-15%, bringing us back to where we were on January 1. Investors need to ask themselves if the world is really that much better than it was at the beginning of the year to justify the current prices.”

About the recent trend of “take-unders,” where companies like Inflection and Character AI are being acquired in non-standard deals.

“Founders like Noam Shazeer at Character.AI might be looking at the daunting amount of capital required to compete with Meta and Google. On the other hand, big tech companies are willing to pay big money for talent but can’t do outright acquisitions due to regulatory concerns. So, they’re finding creative ways to structure these deals. I’m not sure how they work exactly, but it seems like investors are earning 2-3x on their money, which is interesting.”

“These take-unders seem to be happening to avoid federal regulatory scrutiny. These deals are structured in a way that might involve double taxation risks, and the entity often stays around even after the core team has left. It’s not clear whether these deals are preferable for venture investors, but they’re certainly raising eyebrows.”

Saturday, Aug. 17th: I listened to a Building with AI podcast episode with Tomasz Tunguz, founder and GP at Theory Ventures. - Building with AI

“I’m the founder and general partner of a firm called Theory Ventures. We are a team of nine people focused on data, investing in three different categories: the modern data stack, applications and infrastructure for AI, and blockchains, which we view as databases.”

“Compared to previous technological waves, there are two major differences with Gen. AI. First, incumbents have an advantage. Startups have had negative time to launch because companies like Google already have 60% of all the PhDs, all the data, and distribution. Second, unlike the internet or mobile apps, which were easier to build and implement, AI is non-deterministic and chaotic. This unpredictability changes product design and the sales process because buyers now have to consider that the AI might behave unpredictably.”

“We’ll see two waves of AI application development. We’re in the first peak, where large language models are viewed as the solution itself. But we’re realizing that LLMs alone aren’t enough—we need security systems, monitoring systems, classifiers, and ways to eliminate errors and hallucinations to make these systems truly effective.”

“Historically, those ML systems have been used for post hoc analysis (e.g. doing a churn prediction and then using that to inform how the customer success team operates), but increasingly that data is now being used in production systems (e.g. Netflix’s content recommendation system).”

“LLMs are unpredictable across versions, with up to 40% of prompts breaking when upgrading from one version to another. This unpredictability makes it difficult to guarantee a consistent user experience. The closest analogy might be search engine quality at Google. Like search engines, LLMs require both machine and human evaluations to assess quality. Every company will need its own set of evaluations, which are highly context-specific. In the future, we may see analytics vendors that create synthetic evaluation tests based on how users engage with AI. This feedback loop between analytics and evaluations will be crucial, but it’s still early days.”
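The point about prompts breaking across model versions suggests a simple regression check: keep a suite of prompts with context-specific pass/fail checks, run them against the old and the new model, and measure how many previously passing prompts now fail. The Python sketch below is a minimal, hypothetical version; the two “models” are stub lookups standing in for real API calls, and the example does not reproduce the 40% figure from the quote.

```python
from typing import Callable, Dict

Model = Callable[[str], str]   # prompt -> completion
Check = Callable[[str], bool]  # completion -> passed?


def broken_prompt_rate(cases: Dict[str, Check], old: Model, new: Model) -> float:
    """Share of prompts that passed their check on the old model but fail on the new one."""
    passed_before = [prompt for prompt, check in cases.items() if check(old(prompt))]
    if not passed_before:
        return 0.0
    broken = [prompt for prompt in passed_before if not cases[prompt](new(prompt))]
    return len(broken) / len(passed_before)


# Hypothetical eval suite: each prompt comes with its own context-specific check.
cases: Dict[str, Check] = {
    "What is 2+2? Reply with a digit only.": lambda out: out.strip() == "4",
    "What is the capital of France?": lambda out: "Paris" in out,
}

# Stub models standing in for two versions of the same LLM.
old_answers = {"What is 2+2? Reply with a digit only.": "4",
               "What is the capital of France?": "Paris"}
new_answers = {"What is 2+2? Reply with a digit only.": "The answer is four.",
               "What is the capital of France?": "It is Paris."}

rate = broken_prompt_rate(cases, lambda p: old_answers[p], lambda p: new_answers[p])
print(f"{rate:.0%} of previously passing prompts broke after the upgrade")
```

In practice the checks are the hard part: as the quote says, every company needs its own, and many will mix automated checks with human review.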

“Typically, new machine learning innovations start at about 75% accuracy and take 10 to 15 years to improve to 95-98%. There’s always an initial over-promise, a collapse, and then a comeback. The key is to match the use case to the risk. For instance, consumers love AI for tasks like generating images, where the range of acceptable outcomes is broad, and the cost of being wrong is low. But it would be a mistake for finance or healthcare professionals to ignore these technologies.”

What is the framework to identify suitable use cases for LLMs?

“Let’s structure this in three ways. First, consider implementation: there’s co-pilot (sentence completion) and agents (full task automation). Sentence completion can accelerate work by 50% without requiring a high level of trust. Task automation is still early and carries more risk.

Second, consider accuracy. If the system is 75% accurate, is that valuable? In creative tasks like writing, an 80% solution is useful because it’s easier to edit than create. But in areas like credit scoring, 75% accuracy is useless.

Finally, look for rote toil—repetitive, unattractive work that no one wants to do, especially in industries with demographic shifts or labor shortages. For example, long-haul trucking, BDR roles, and security analysis are all areas ripe for AI automation. The key is finding tasks where an 80% solution is sufficient and the work is highly repetitive.”

“The first industries were computer software (like software writing with Copilot), legal, and marketing copy. Now, we’re seeing it in financial analysis, accounting, and security. These are the areas where we’re seeing the most innovation and investment.”

“Microsoft is doing an excellent job. They’ve built a $5bn AI business in 18 months by infusing AI into all their products.”

“In the next wave, we’ll see a linkage between software and hardware. These models are expensive to run, but your laptop or phone has a powerful GPU. Pushing models to the edge (on-device) will give companies with both software and hardware a huge advantage.”

“It’s also important for incumbents to support as many model families as possible. Microsoft supports 50 different models, which is crucial because enterprises are starting to build on Azure, perceiving it as the place where the best models are available first. Supporting a broad range of models and fostering strategic relationships with LLM creators will be key.”

“Gartner published a study showing that customer acquisition costs for software companies have increased 60% over the last five years. This means a small but compounding advantage in customer acquisition costs can give you disproportionate market share.”

“If you’re a startup looking to disrupt a specific industry with an AI-native approach, how would you set up to maximize your odds of unseating an incumbent? The next generation of startups needs to offer more than just an AI feature. You need to create some form of intellectual property or competitive advantage that’s unique, whether it’s proprietary data, better revenue generation, or cost reduction.”

“Innovation equals invention plus distribution. As the cost of invention goes to zero due to generative AI, distribution becomes much more important.”

Sunday, Aug. 18th: I listened to a More or Less podcast episode with Vinod Khosla on AI. - More or Less

OpenAI’s partnership with Apple:

“Apple’s AI integration demonstrates that computers will learn humans instead of humans needing to learn computers.”

“Obviously, from an OpenAI perspective, it clearly establishes where OpenAI is in the competition for the best LLMs. Many people wanted that business, and many things were evaluated. It’s rewarding to see where Apple thinks the best AI is.”

“I think the bigger thing is how we interface with computers, and Siri is the beginning of what will evolve over time into agentic interfaces for humans.”

“Apple can do a lot on-device with small models.” “I think what on-device small models do will be different from what the large models do.” “If you want human-level intelligence from a model, it’ll be a big model. But I don’t think small models substitute for big models. They do some things really well, though. If you’re talking to something with very low latency, you want a shorter path, so that’d be a small model on-device.”

“My advice to all our startups is to ignore the cost of compute because any assumption you make, any dollar you spend on optimizing your software, will be worthless within the year. So forget about it. Rely on competition in the marketplace between Claude, Gemini, and OpenAI to reduce that cost to a point where it doesn’t matter. Below a certain level, it doesn’t matter. If it’s 10% or less of that, it doesn’t matter.”

“It is costing an order of magnitude more to train, and that’s why I don’t think open-source models will be viable because of the training costs. But once you’re trained, you want as broad a set of usage as possible for two reasons. One, you want the most revenue from it, and the lowest-cost models will get the most revenue. But more importantly, there’s huge data generation for training the next generation of the model.”

“I’m pretty convinced that in the next few years, revenue isn’t the important metric [for LLMs]. Of course, you don’t want to lose so much money you can’t afford it, but you’re not trying to make tons of money. You’re trying to get tons of usage and tons of data from that usage to learn to be a better model.”

“I do think there’s a lot to be gained in intelligence [for LLMs]—whether it’s reasoning, probabilistic thinking, or pattern matching. These models have lots of headroom to get better, and I think we’ll see stunning advances practically every year.”

“If you’re building foundation models and trying to compete with OpenAI and Google, that’s not a great place to be. LLMs will belong to the large players.”

“I don’t think we’re anywhere near done with fundamental techniques. We’re exploiting the Transformer model more and more, but there are other models to be developed. It’s just that everybody’s scared to invest in anything other than Transformer models. I’m very focused on esoteric things.”

“At the next level up, the applications, there’s huge opportunity. One of my big forecasts is that almost all expertise will be free. Whether you’re talking about a primary care doctor, a teacher, a structural engineer, or an oncologist, there are so many areas of expertise—hundreds, if not thousands—each of which will be a very successful venture.”

How do you build a defensible business in the GenAI world? “The idea that one model will do everything is incorrect. Workflows differ in every area.”

“There’s room for seed investments, but following what everyone else is doing is a bad strategy. When somebody leaves DeepMind and gets $2 million just because, that’s not a good strategy.”

“We spend a lot of time evaluating whether a business could be railroaded by large models or become obsolete as a feature. About 80% of our seed investments are below a $25m post-money valuation. Occasionally, we step up to something like Adept, even with zero revenue, because we have a certain belief system. About 80-90% of the billion-dollar valuations we pass on, but there’s 10-20% we’ll invest in, like Devin or Replit. Occasionally, we’ll make small, token investments for relationships, but we’re very disciplined about it.”

AI will create a deflationary economy. “It will be a hugely deflationary economy. If you take a million doctors and reduce them to just 200k, and 80% has gone, then you’re talking about $250bn less spent on healthcare, even if you can increase healthcare’s usage.”
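The arithmetic behind this quote can be unpacked as below. The quote gives only the headcount change and the $250bn total; the implied spend per displaced doctor is derived here and is not a figure stated in the interview.

$$
\begin{aligned}
\text{displaced doctors} &= 1{,}000{,}000 - 200{,}000 = 800{,}000 \quad (80\%)\\
\text{implied spend per displaced doctor} &= \frac{\$250\text{bn}}{800{,}000} = \$312{,}500
\end{aligned}
$$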

Monday, Aug. 19th: Carta published a report on VC fund performance with data from 1.8k US funds. - Carta

Funds are deploying capital at a slower pace. “Funds in the 2022 vintage have deployed about 43% of their committed capital at the 24 month mark, the lowest share of any analyzed vintage. Prior vintages ranged from 47%-60% after 24 months.”

Graduation rates are declining. “30.6% of companies that raised a seed round in Q1 2018 made it to Series A within two years. Only 15.4% of Q1 2022 seed startups did so in the same timeframe.”
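To put the Carta graduation figures in perspective, here is a quick back-of-the-envelope check in Python. It uses only the two percentages quoted above; the variable names are illustrative.

```python
# Seed-to-Series-A graduation rates within two years, from the Carta figures quoted above
rate_q1_2018 = 30.6  # % of Q1 2018 seed startups reaching Series A within 24 months
rate_q1_2022 = 15.4  # % of Q1 2022 seed startups reaching Series A within 24 months

ratio = rate_q1_2022 / rate_q1_2018    # share of the 2018 rate that the 2022 cohort retains
drop_pp = rate_q1_2018 - rate_q1_2022  # absolute drop in percentage points

print(f"2022 rate is {ratio:.0%} of the 2018 rate")
print(f"Drop: {drop_pp:.1f} percentage points")
```

In other words, the two-year graduation rate has roughly halved, a drop of about 15 percentage points.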

Tuesday, Aug. 20th: The Economist wrote about investors becoming increasingly skeptical about the profitability of AI. - The Economist

“A growing number of investors worry that AI will not deliver the vast profits they seek.”

“A growing number of observers now question the limitations of LLMs, which power services such as ChatGPT.”

“According to the latest data from the Census Bureau, only 4.8% of American companies use AI to produce goods and services, down from a high of 5.4% early this year.”

“‘The future of AI is just going to be like every other technology. There’ll be a giant expensive build-out of infrastructure, followed by a huge bust when people realise they don’t really know how to use AI productively, followed by a slow revival as they figure it out,’ says Noah Smith, an economics commentator.”

The “hype cycle,” a concept from Gartner, holds that new technologies go through phases of euphoria, disillusionment, and eventual mainstream adoption, but whether it applies to AI is debatable. Railways and the internet show how initial hype can lead to massive infrastructure build-outs and eventual widespread adoption, but many technologies have not followed this pattern. Gartner data and further analysis suggest the full cycle is rare: only a fifth of breakthrough technologies follow this path, and many never recover once the initial enthusiasm fades.

Wednesday, Aug. 21st: I read a portrait of Palmer Luckey, Anduril’s cofounder. - Tablemag

After creating the Oculus Rift, Palmer Luckey sold Oculus to Facebook for $2bn but was later fired over controversial political donations. His current company, Anduril, now valued at $14bn, develops advanced defense technologies such as autonomous drones and AI-powered surveillance systems.

“You can’t make everyone happy, and if you try, you’ll end up with a bland, meaningless existence.”

“The future of national security isn’t about building more tanks and planes; it’s about leveraging AI, autonomy, and technology that can outthink the enemy.”

“Disruption isn’t just about breaking things; it’s about building something better in their place.”

“Innovation happens when you’re willing to break the rules and challenge the status quo.”

Thursday, Aug. 22nd: I read Contrary Research’s deep-dive on Mistral. - Contrary

“Mistral AI develops open-source foundation models. These are an alternative to closed, proprietary AI models like those developed by OpenAI, Google, and Anthropic. The company also focuses on developing more efficient and cost-effective models, targeting the ‘performance-cost frontier.’”

“The company also offers “optimized” versions of its AI models via its developer platform, for which it charges following a usage-based business model.”

“La Plateforme is Mistral AI’s developer platform. This platform leases “optimized” versions of the company’s models to developers through generative endpoints, accessible via an API. Its goal is to offer efficient deployment and tailored customization for various use cases.”

“Le Chat is Mistral AI’s chatbot service, functionally equivalent to OpenAI’s ChatGPT but powered by Mistral AI’s foundation models. Mistral AI also offers Le Chat Enterprise, a service designed for businesses to “empower your team’s productivity with self-deployment capacities, and fine-grained moderation mechanisms.””

“As of April 2024, notable Mistral AI customers include Lamini, Arcane, Lindy, Hugging Face, Brave, Cloudflare, Pretto, BNP Paribas, Orange, and MongoDB.”

Friday, Aug. 23rd: I read a portrait of Alex Karp, Palantir’s CEO. - NYT, Arny Trezzi

Alex Karp, CEO of Palantir, is an unconventional tech leader with a deep philosophical streak, often contemplating the moral implications of technology.

Palantir, under Karp’s leadership, has grown into a controversial powerhouse, known for its work with government agencies, including the military and intelligence sectors.

Karp’s approach to leadership and technology is marked by a blend of skepticism toward Silicon Valley norms and a commitment to addressing complex global issues.

“I’m not running this company to be loved. I’m running it to keep the West safe.”

“If you want to be liked, this is the wrong place. If you want to solve complex problems, welcome aboard.”

“I don’t fit the Silicon Valley mold, but maybe that’s why Palantir works.”

“I’d rather be misunderstood for doing the right thing than celebrated for following the crowd.”

“We’re in the business of providing clarity in a world full of chaos.”

“The only solution to stop A.I. abuse is to use A.I.”

“In some ways, Alex doesn’t look like a salesperson from central casting you would send to the C.I.A. The formulation I always have is that if you’re trying to sell something to somebody, the basic paradox is you have to be just like them, so they can trust you — but you have to be very different from them so that they think you have something they don’t have.”

Saturday, Aug. 24th: Bessemer published its annual Cloud 100 benchmark. - Bessemer

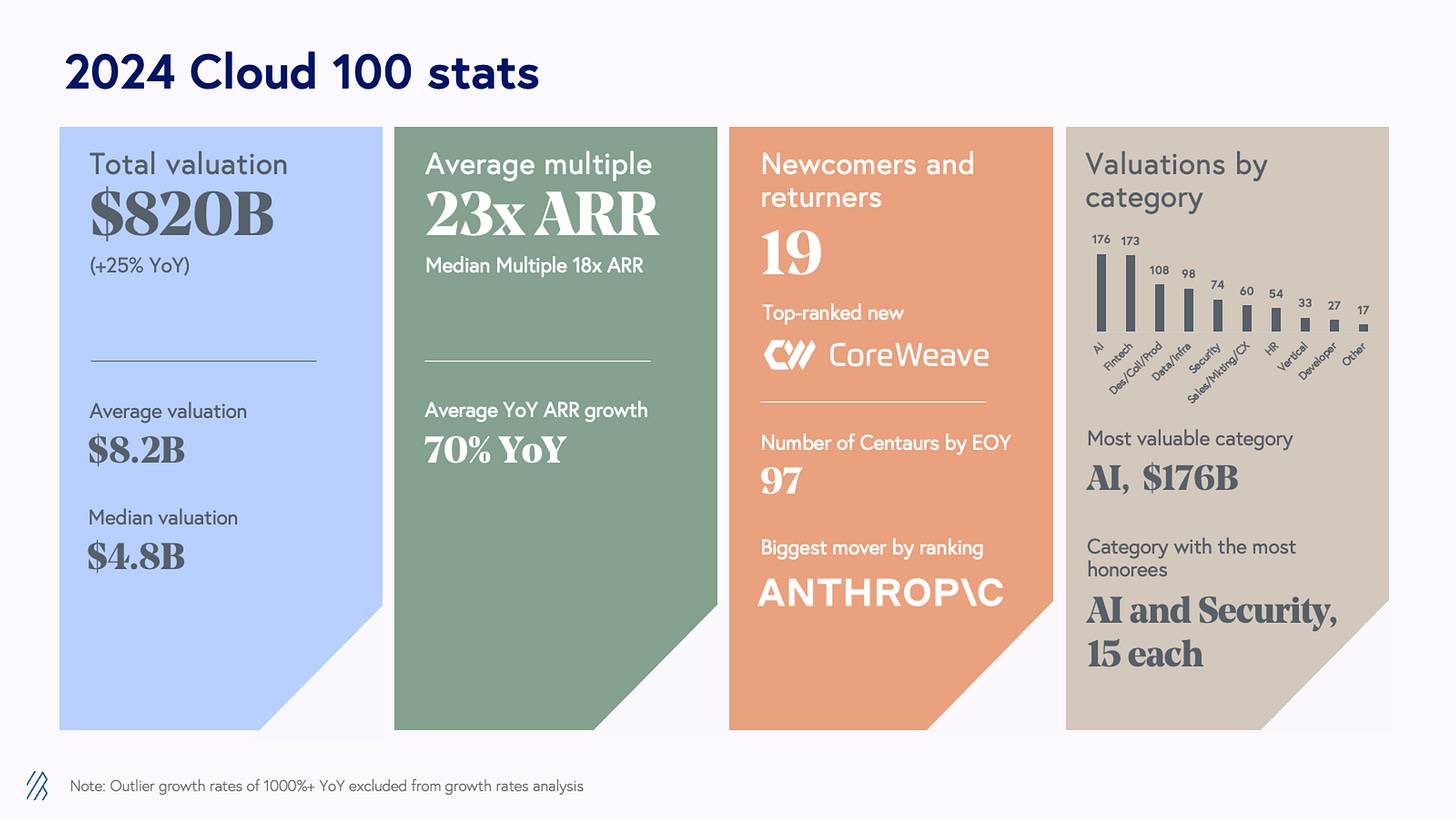

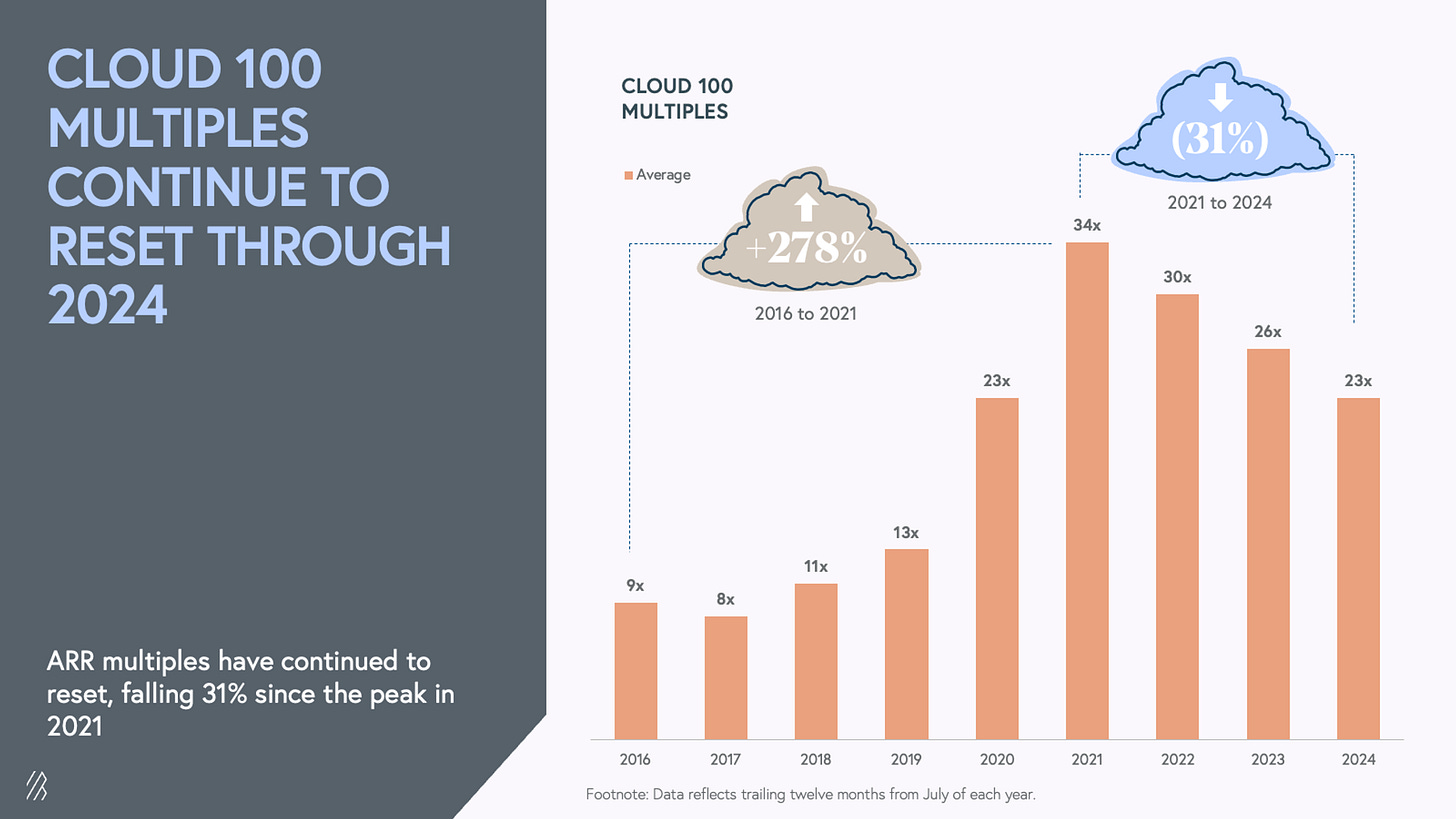

“The Cloud 100 list value reached $820bn this year, a +25% YoY increase and the highest list value ever in Cloud 100 history (compared with the previous record of $738bn set by the 2022 Cloud 100 cohort).”
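Note that the +25% is a year-over-year figure, so it compares this list with last year’s, not with the 2022 record. The Python sketch below backs out both comparisons. It assumes the 25% applies to the total list value, and because that percentage is rounded, the implied 2023 value is approximate.

```python
list_value_2024 = 820.0  # $bn, Cloud 100 list value this year
yoy_growth = 0.25        # +25% YoY as stated by Bessemer (rounded)
record_2022 = 738.0      # $bn, previous record set by the 2022 cohort

# Back out last year's list value from the YoY growth rate
implied_2023 = list_value_2024 / (1 + yoy_growth)
# Compare this year's value with the previous all-time record
vs_record = list_value_2024 / record_2022 - 1

print(f"Implied 2023 list value: ~${implied_2023:.0f}bn")
print(f"Increase over the 2022 record: {vs_record:.0%}")
```

The new record is therefore about 11% above the 2022 peak. The 25% jump comes from a 2023 list worth roughly $656bn.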

“Even as companies have faced a challenging macroeconomic environment in the past few years, the time it took the average Cloud 100 company to reach Centaur status—crossing $100m ARR—has decreased consistently since the list’s inception. It took the average 2024 Cloud 100 company under eight years to reach Centaur status, down from ten years for the inaugural 2016 Cloud 100 cohort. AI companies in particular have shown their ability to scale faster than others, averaging just over six years for this year’s cohort.”

“97% of honourees are forecasted to reach or surpass $100 million of ARR by the end of the year (vs. 95% in 2023).”

Sunday, Aug. 25th: Matthew Ball wrote about Roblox. The company is evolving from a pure gaming platform to a broader virtual ecosystem integrating new technologies like AI and VR. - Matthew Ball

Roblox’s revenue grew 16% YoY to $2.2bn in 2023.

DAUs rose 18% YoY to 65.6m.

Users spent over 50bn hours on Roblox in 2023, a sign of high engagement.

54% of Roblox users are now over 13, showing the platform’s appeal beyond younger audiences.

Roblox paid out $710m to its developers in 2023, underlining the growing creator economy on the platform.

“On a monthly basis, Roblox now counts more than 380m users according to RTrack – 2x as many as PC gaming leader Steam, 3x that of Sony’s PlayStation, 3x the number of unique annual users of the Nintendo Switch in a year, and 5x as many as have bought an Xbox console in the last decade.”

“Obviously, Roblox has a costs problem. Over the last twelve months it has averaged $138 in costs for every $100 in revenue.”

“Roblox is increasingly focused on using GenAI to provide real-time player communications (e.g., transcription and translation), as well as power asset and world creation, and operate AI agents and NPCs – none of which is cheap to create or operate.”

“Governments around the world are now forcing Apple’s iOS platform and Google’s Android to open up their app distribution and monetization models, at least in part (it’s also no coincidence that Epic Games, which operates a rival to Roblox, has been leading various legal efforts against both Apple and Google on exactly this issue). The specifics vary by market, by platform, and sometimes type of transaction. There’s not yet reliable data to suggest the degree to which Roblox might save on commissions (at a minimum, they will substitute a 30% fee with a 4% credit card processing expense, but additional iOS/Android fees might also apply), and the more complicated purchasing flow may require discounts and/or lead to fewer transactions, which would harm revenue. Accordingly, the net opportunity may be a reduction from 25% to 20% in the medium term, with a longer-term possibility of something closer to 10%.”
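To see what moving from 25% to 20% (or 10%) would take, here is a rough Python sketch built on the two fees in the quote. It is a hypothetical model, not Matthew Ball’s: it assumes every dollar shifted off app-store billing saves exactly 26 points (30% minus 4%), and it ignores any extra iOS/Android fees and any lost conversions. The `share_to_shift` helper is illustrative only.

```python
APP_STORE_FEE = 0.30    # iOS/Android commission cited in the quote
CARD_PROCESSING = 0.04  # card processing cost cited in the quote
saving_per_dollar = APP_STORE_FEE - CARD_PROCESSING  # ~0.26 saved per shifted dollar


def share_to_shift(current_rate: float, target_rate: float) -> float:
    """Hypothetical helper: share of total bookings that would need to move from
    app-store billing to direct payment to lower the blended fee rate from
    current_rate to target_rate, assuming a flat 26-point saving per shifted dollar."""
    return (current_rate - target_rate) / saving_per_dollar


print(f"Saving per $100 shifted: ${100 * saving_per_dollar:.0f}")
print(f"25% -> 20%: shift ~{share_to_shift(0.25, 0.20):.0%} of bookings")
print(f"25% -> 10%: shift ~{share_to_shift(0.25, 0.10):.0%} of bookings")
```

Under these simplifying assumptions, the medium-term 20% case needs roughly a fifth of bookings to move to direct payment. The longer-term 10% case needs well over half.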

“In the average month, fewer than 6% of users and 17% of average DAUs purchase Robux.”

“Smaller App Store payments, more ARPU, and a bigger ad platform are the linear opportunities for Roblox.”

Monday, Aug. 26th: Alex Kolicich wrote about AI and the defense industry. - Alex Kolicich

“I've been contemplating how the unique optimism and rapid expansion of AI capabilities we've witnessed over the past year and a half are juxtaposed with danger and uncertainty in the investment world.”

“As I mentioned last time, AI valuations are fully priced, but the value capture remains uncertain.”

“Revenue is being generated, but it's not sufficient. What's uniquely missing is gross margin and defensibility. Many companies in AI are operating with low gross margins in highly competitive spaces relative to near-term demand.”

“The market, however, seems to price the view that cloud software companies will benefit from AI at nearly zero. I've shown this chart many times, but cloud software revenue multiples are trading close to near-term lows. This stands in contrast to a huge boom in AI stocks like NVIDIA, ASML, PLTR, etc.”

“When we discussed investing in the Anduril Series A at 8VC in 2018, there was significant consternation in the tech ecosystem on doing work in defense. It was around this time that Google famously dumped their Project Maven DOD program due to employee unrest, which gives a sense of the strong sentiment against supporting defense innovation in Silicon Valley at the time.”

“The Department of Defense (DoD) has moved from often waiting twenty years for a single solution that fit the traditional mold to engaging with the country's most talented technology players in iterative processes.”

Tuesday, Aug. 27th: Felicis wrote about the physical design software market. - Felicis

“Shortly after, the PC was released as well, although it took time for the performance of PC semiconductors to support CAD software. This opened the door for early software companies to only develop software and not worry about hardware development.”

“From 1981 to 1986, six of today’s oligopolistic design companies were founded: Mentor Graphics (1981), Autodesk (1982), Cadence (1983), Bentley Systems (1984), PTC (1985), and Synopsys (1986). These companies made design software specifically for workstations and/or PCs, creating a cheaper and more flexible alternative to the incumbents.”

“The industry hasn’t seen major changes since the 1980s. Companies have switched pricing models and have started to migrate to cloud-based versions of products, but the industry structure has remained mostly constant.”

“The moats of the incumbents are so deep and the switching costs have such a high risk that this market has been mostly impenetrable.”

Wednesday, Aug. 28th: Daniel Kimerling at Deciens wrote a post on alignment in venture capital. It critiques the industry’s short-term focus, where companies repeatedly raise and burn capital without achieving sustainable growth, and advocates a long-term investment approach that aligns venture capital with founders’ and LPs’ interests. The piece argues that VC firms should prioritize building durable businesses and compounding value over time rather than chasing quick returns through constant fundraising cycles, and proposes true alignment and long-term partnerships as the answer to the VC industry’s current challenges. - Deciens

“By the time all of the assets are distributed and funds wound down, a venture capital fund’s actual length may very well be 15-20 years.”

“The enduring nature of LP capital and the way that VC funds are structured are in stark contrast to the way that most VC-backed companies operate.”